
LangGraph vs LlamaIndex Showdown: Who Makes AI Agents Easier in JavaScript?

Author: Ali Ibrahim


Introduction

In the last year, the AI landscape has rapidly evolved. We've moved beyond static prompt engineering into building AI agents, autonomous systems capable of reasoning, taking actions, using tools, and adapting to complex goals. These agents represent a major leap from traditional AI applications, requiring not just LLMs but orchestration, memory, and structured workflows.

Most of this innovation has happened in the Python ecosystem, with tools like LangChain, AutoGen, and Semantic Kernel leading the way. But as JavaScript and TypeScript continue to dominate full-stack development, there’s a growing need for agent frameworks built natively for JS, without forcing developers to switch stacks or work around language mismatches.

In this article, we’ll explore what makes an AI agent framework different from a typical AI library, then take a closer look at two of the most capable JS-native options: LangGraph.js and LlamaIndex.TS.

Why We Need Agent Frameworks (Not Just AI Frameworks)

Most AI frameworks were designed to simplify integration with model providers. They made it easy to switch models and manage basic message history, but offered little beyond that. Building AI agents introduces a different level of complexity: agents need to plan before acting, manage short-term and long-term memory, coordinate tools, handle long-running tasks, and often incorporate human feedback. This level of orchestration requires a new kind of framework, one built specifically for agent workflows.

LlamaIndex

LlamaIndex began as a Python toolkit for connecting LLMs to structured and unstructured data. As interest in JavaScript-native tooling grew, the team released LlamaIndex.TS, a TypeScript SDK built from the ground up to bring those same capabilities to modern JS environments like Node.js and Next.js.


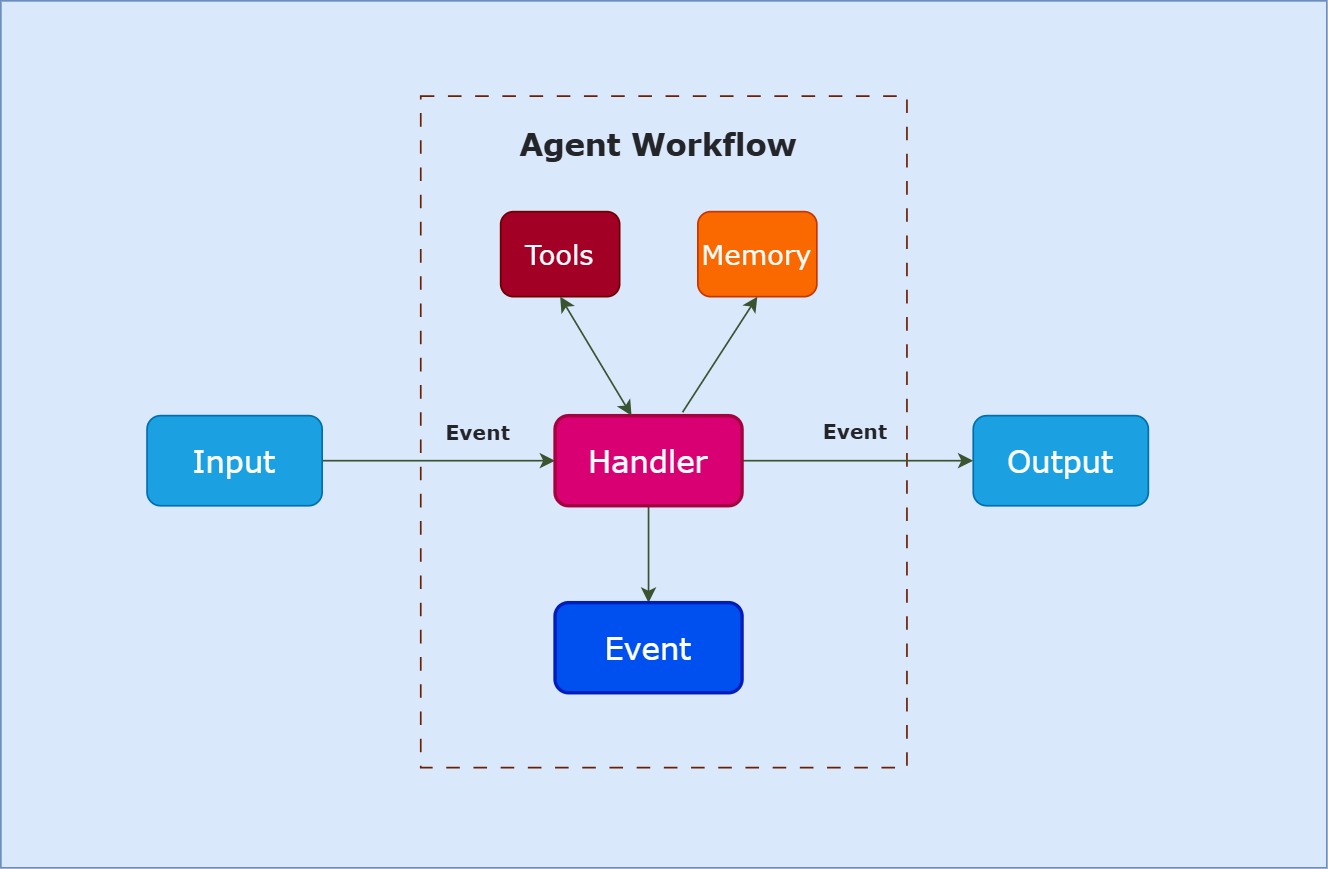

Architecture of agents in LlamaIndex

The legacy of bringing LLM capabilities into data pipelines is still reflected in features like query engines, node postprocessors, and chat engines. For building agents, however, LlamaIndex adds a new layer of control through its workflow system, which lets developers structure logic, memory, and tool use with event-based patterns.

How Agent Workflows Work in LlamaIndex.TS

LlamaIndex agents operate through a sequence of structured events. Each step in the agent’s reasoning process is modeled as an event that triggers a corresponding handler. These handlers are registered within a workflow, and each one is responsible for a specific part of the agent’s behavior—whether it’s interpreting input, invoking a tool, or formatting output. This design gives developers full visibility and control over agent execution, while keeping the architecture modular and composable.
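To make the event-and-handler idea concrete, here is a minimal, framework-agnostic sketch in plain TypeScript. It is not the LlamaIndex.TS workflow API: the MiniWorkflow class, the event shapes, and the toy "sum a b" rule that stands in for the LLM are all hypothetical. It only illustrates the core pattern: each event type is routed to one registered handler, each handler returns the next event, and the run ends when a stop event appears.

```typescript
// Hypothetical event shapes for illustration; these are not LlamaIndex.TS classes.
type WorkflowEvent =
  | { type: "start"; input: string }
  | { type: "toolCall"; tool: string; args: number[] }
  | { type: "toolResult"; value: string }
  | { type: "stop"; output: string };

// A handler consumes one event and emits the next one.
type Handler = (event: WorkflowEvent) => WorkflowEvent;

class MiniWorkflow {
  private readonly handlers = new Map<WorkflowEvent["type"], Handler>();
  // Event types seen during the latest run, kept for inspection.
  readonly trace: string[] = [];

  // Register the handler responsible for one event type.
  on(type: WorkflowEvent["type"], handler: Handler): this {
    this.handlers.set(type, handler);
    return this;
  }

  // Dispatch events to their handlers until a "stop" event is produced.
  run(input: string, maxSteps = 10): string {
    this.trace.length = 0;
    let event: WorkflowEvent = { type: "start", input };
    for (let step = 0; step < maxSteps; step++) {
      this.trace.push(event.type);
      if (event.type === "stop") return event.output;
      const handler = this.handlers.get(event.type);
      if (!handler) throw new Error(`No handler registered for "${event.type}"`);
      event = handler(event);
    }
    throw new Error("Workflow did not reach a stop event within maxSteps");
  }
}

const workflow = new MiniWorkflow()
  // Interpret the input. A toy rule stands in for the LLM's decision.
  .on("start", (e) => {
    if (e.type !== "start") throw new Error("Unexpected event");
    const nums = e.input.match(/^sum (\d+) (\d+)$/);
    return nums
      ? { type: "toolCall", tool: "sumNumbers", args: [Number(nums[1]), Number(nums[2])] }
      : { type: "stop", output: `You said: ${e.input}` };
  })
  // Invoke the requested tool.
  .on("toolCall", (e) => {
    if (e.type !== "toolCall") throw new Error("Unexpected event");
    return { type: "toolResult", value: String(e.args.reduce((sum, n) => sum + n, 0)) };
  })
  // Format the tool result as the final output.
  .on("toolResult", (e) => {
    if (e.type !== "toolResult") throw new Error("Unexpected event");
    return { type: "stop", output: `The sum is ${e.value}.` };
  });

console.log(workflow.run("sum 2 3")); // The sum is 5.
console.log(workflow.trace.join(" -> ")); // start -> toolCall -> toolResult -> stop
```

Because every transition is an explicit event, the recorded trace doubles as a simple execution log. The real framework layers typed events, async handlers, streaming, and shared context on top of this basic dispatch idea.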

Example of a Simple Agent Using LlamaIndex

The following code demonstrates a basic agent built with LlamaIndex.TS. It uses OpenAI’s GPT-4.1-mini model. I added the sumNumbers tool because the agent function requires at least one tool in its parameters.

Installations:

I'm using pnpm here, but any package manager will work.

```bash
pnpm add llamaindex @llamaindex/openai @llamaindex/workflow zod
```

```typescript
import { openai } from "@llamaindex/openai";
import { agent } from "@llamaindex/workflow";
import { tool } from "llamaindex";
import { z } from "zod";

const sumNumbers = tool({
  name: "sumNumbers",
  description: "Use this function to sum two numbers",
  parameters: z.object({
    a: z.number().describe("The first number"),
    b: z.number().describe("The second number"),
  }),
  execute: ({ a, b }: { a: number; b: number }) => `${a + b}`,
});

const chatAgent = agent({
  llm: openai({ model: "gpt-4.1-mini" }),
  verbose: false,
  systemPrompt: "You are a helpful assistant.",
  tools: [sumNumbers], // requires at least one tool
});

export const runAgent = async (message: string) => {
  const result = await chatAgent.run(message);
  return result;
};

runAgent("What is the capital of France?")
  .then(response => {
    console.log("Response:", response.data);
  });
```

Console output:

```
Response: {
  result: 'The capital of France is Paris.',
  state: {
    memory: ChatMemoryBuffer {
      chatStore: SimpleChatStore {},
      chatStoreKey: 'chat_history',
      tokenLimit: 750000
    },
    scratchpad: [],
    currentAgentName: 'Agent',
    agents: [ 'Agent' ],
    nextAgentName: null
  }
}
```

LlamaIndex Key Features and Tools

- Built-in support for LLMs, custom data loaders, and vector stores

- create-llama package/CLI with templates like Agentic RAG, Code Generator, and Financial Report agents

- LlamaIndex Server and Chat UI for fast API and UI deployment

- Integration with OpenTelemetry and LangTrace for tracing and observability

- Memory, achieved by using chat stores

- Evaluators for correctness, faithfulness, and relevancy

- Support for multi-agent systems using the multiAgent function, etc.
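The agent's memory in the console output above is a ChatMemoryBuffer with a tokenLimit backed by a chat store. As a rough illustration of what a token-limited memory buffer does, here is a hypothetical sketch in plain TypeScript: it keeps the full history in a store but hands back only the newest messages that fit within the budget. The TokenLimitedMemory class is invented for this example, and it counts words as a crude stand-in for tokens; LlamaIndex's own implementation and tokenizer differ.

```typescript
interface ChatMessage {
  role: "system" | "user" | "assistant";
  content: string;
}

// Crude token estimate (assumption): one token per whitespace-separated word.
// Real frameworks count tokens with a model-specific tokenizer.
const estimateTokens = (text: string): number => {
  const trimmed = text.trim();
  return trimmed === "" ? 0 : trimmed.split(/\s+/).length;
};

// Hypothetical token-limited memory buffer; not LlamaIndex's ChatMemoryBuffer.
class TokenLimitedMemory {
  // Full history, playing the role of a chat store.
  private readonly store: ChatMessage[] = [];

  constructor(private readonly tokenLimit: number) {}

  put(message: ChatMessage): void {
    this.store.push(message);
  }

  // Return the newest messages that fit within tokenLimit, oldest first.
  // Stops at the first message that does not fit, so the window stays contiguous.
  get(): ChatMessage[] {
    const recent: ChatMessage[] = [];
    let used = 0;
    for (const message of [...this.store].reverse()) {
      const cost = estimateTokens(message.content);
      if (used + cost > this.tokenLimit) break;
      used += cost;
      recent.unshift(message);
    }
    return recent;
  }

  get size(): number {
    return this.store.length;
  }
}

const memory = new TokenLimitedMemory(6);
memory.put({ role: "user", content: "What is the capital of France?" }); // 6 words
memory.put({ role: "assistant", content: "Paris." }); // 1 word
memory.put({ role: "user", content: "And of Italy?" }); // 3 words

console.log(memory.get().map((m) => m.content).join(" | ")); // Paris. | And of Italy?
console.log(memory.size); // 3
```

The oldest question drops out of the window because adding it would exceed the budget, yet it stays in the store, which is the usual trade-off between a complete history and what actually fits into the model's context.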

LangGraph

LangGraph was created by the LangChain team to bring more structure and control to agent development. Earlier LangChain agents were often hard to manage, with limited visibility into how steps were executed or tools were used.

LangGraph addresses this challenge by introducing a state-machine model, where each node handles part of the logic and transitions are explicitly defined. This makes it easier to build multi-step agents with clear, traceable control flow.

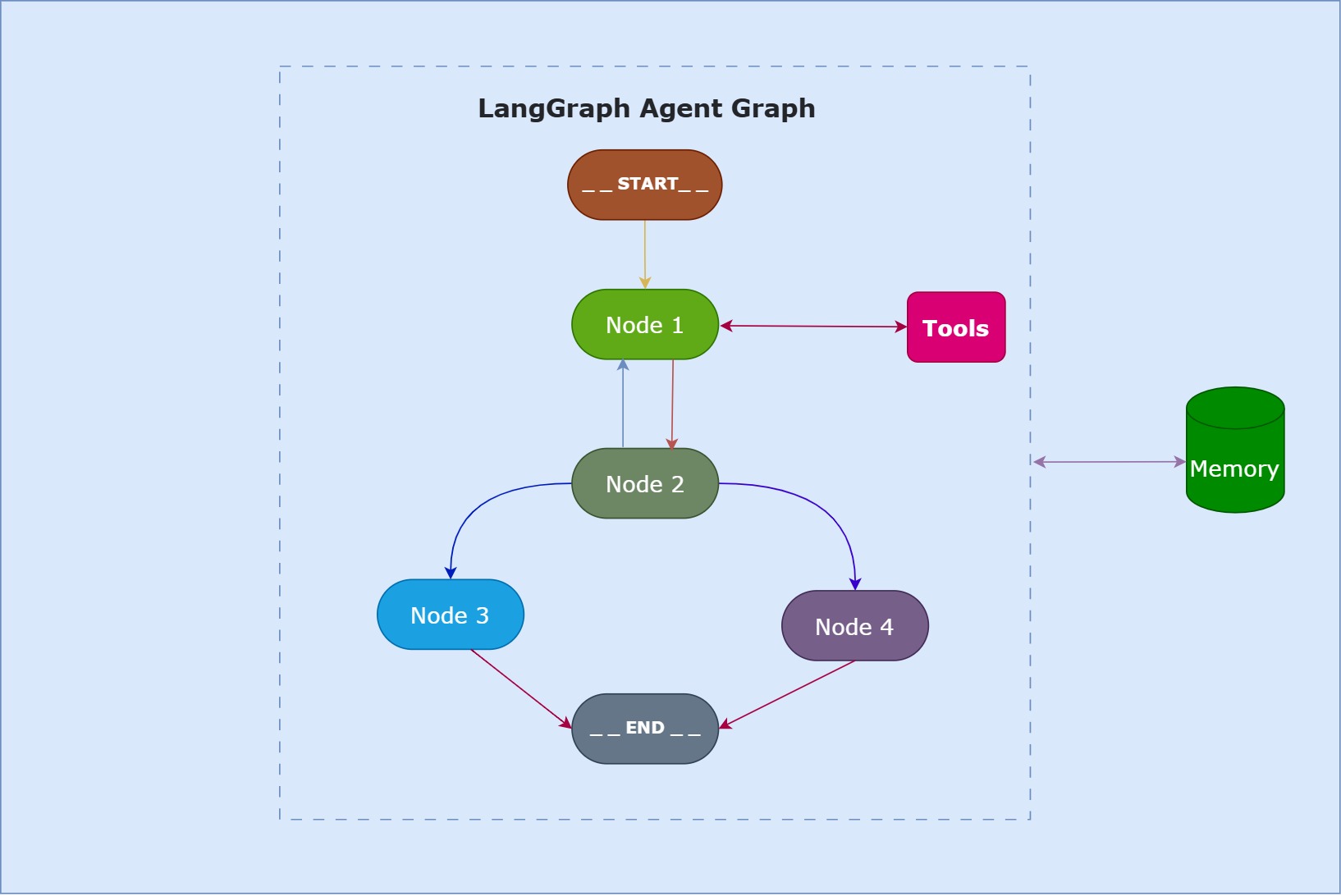

Architecture of LangGraph

LangGraph introduces a state-machine-based architecture for building AI agents. It gives developers full control over execution logic by modeling each agent as a graph of typed functions, where state flows between connected nodes. Below is a breakdown of the key building blocks that power LangGraph.

Nodes, Edges, and State

LangGraph agents are defined using StateGraph, where each node is an async function responsible for reasoning, calling tools, or transforming state. Edges define the control flow between these nodes and can support branching, loops, and conditional transitions.

At the center of this system is a shared state object. It carries user input, tool responses, memory, and intermediate values throughout the graph. The state schema is defined explicitly, and each node returns a partial update that LangGraph merges into the state (using a reducer when one is defined for that key), which lets developers trace and test agent behavior predictably.
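The following sketch models these three building blocks in plain TypeScript so the control flow is easy to follow. It is not LangGraph.js: MiniStateGraph, addRouter, and the toy "agent" and "tools" nodes are hypothetical, nodes are synchronous here (LangGraph nodes can be async), and a plain object spread plays the role of LangGraph's state merging. It shows nodes returning partial state updates, fixed edges, and a conditional edge that loops back until the agent has an answer.

```typescript
// Sentinel node names, mirroring the role of LangGraph's START and END.
const START = "__start__";
const END = "__end__";

// A node reads the current state and returns only the keys it changes.
type NodeFn<S> = (state: S) => Partial<S>;
// A router inspects the state and returns the name of the next node.
type Router<S> = (state: S) => string;

// Hypothetical, synchronous mini state graph; not the LangGraph.js API.
class MiniStateGraph<S extends object> {
  private readonly nodes = new Map<string, NodeFn<S>>();
  private readonly edges = new Map<string, Router<S>>();

  addNode(name: string, fn: NodeFn<S>): this {
    this.nodes.set(name, fn);
    return this;
  }

  // Fixed transition: always go from `from` to `to`.
  addEdge(from: string, to: string): this {
    this.edges.set(from, () => to);
    return this;
  }

  // Conditional transition: the router picks the next node at run time.
  addRouter(from: string, router: Router<S>): this {
    this.edges.set(from, router);
    return this;
  }

  invoke(initial: S, maxSteps = 25): { state: S; path: string[] } {
    let state: S = { ...initial };
    const path: string[] = [];
    let current = this.nextNode(START, state);
    while (current !== END) {
      if (path.length >= maxSteps) throw new Error("Step limit reached");
      const node = this.nodes.get(current);
      if (!node) throw new Error(`Unknown node "${current}"`);
      // Merge the node's partial update into the shared state.
      state = { ...state, ...node(state) };
      path.push(current);
      current = this.nextNode(current, state);
    }
    return { state, path };
  }

  private nextNode(from: string, state: S): string {
    const router = this.edges.get(from);
    if (!router) throw new Error(`No outgoing edge from "${from}"`);
    return router(state);
  }
}

interface AgentState {
  question: string;
  toolResult: number | null;
  answer: string;
}

const graph = new MiniStateGraph<AgentState>()
  // "agent" node: a toy rule stands in for the LLM's reasoning step.
  .addNode("agent", (s) =>
    s.toolResult === null ? {} : { answer: `The sum is ${s.toolResult}.` },
  )
  // "tools" node: adds up every number that appears in the question.
  .addNode("tools", (s) => ({
    toolResult: s.question
      .split(/\D+/)
      .filter(Boolean)
      .map(Number)
      .reduce((sum, n) => sum + n, 0),
  }))
  .addEdge(START, "agent")
  // Loop through "tools" until the agent has an answer, then stop.
  .addRouter("agent", (s) => (s.answer ? END : "tools"))
  .addEdge("tools", "agent");

const { state, path } = graph.invoke({ question: "What is 2 + 3?", toolResult: null, answer: "" });
console.log(path.join(" -> ")); // agent -> tools -> agent
console.log(state.answer); // The sum is 5.
```

The step limit matters as soon as edges can form cycles: without it, a router that never returns END would loop forever.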

Advanced Execution Concepts

LangGraph includes built-in support for persistence and breakpoints, enabling powerful control over agent workflows:

- Persistence: With checkpointers, LangGraph can store and reload graph state across sessions. This allows for long-running agents, fault-tolerant execution, and features like time travel or session replay.

- Breakpoints and Human-in-the-Loop: Developers can pause execution at specific nodes, wait for user input or approval, and then resume, making LangGraph suitable for decision-critical workflows.

These features are part of a broader toolkit that makes LangGraph adaptable for both simple and highly complex agentic applications.
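Here is a minimal sketch of how checkpointing and a human-in-the-loop breakpoint fit together, again in plain TypeScript with hypothetical names (CheckpointedRunner, start, resume) rather than LangGraph's API. State is saved per thread ID after every step, execution pauses before any step listed in interruptBefore, and a later call resumes from the saved checkpoint after merging in the human's input. In LangGraph.js itself, the corresponding pieces are a checkpointer such as MemorySaver passed to compile() together with an interruptBefore list, and a thread_id supplied in the invocation config.

```typescript
// A named step that returns a partial state update.
interface Step<S> {
  name: string;
  run: (state: S) => Partial<S>;
}

interface Checkpoint<S> {
  state: S;
  nextStep: number; // index of the step that should run next
}

type RunResult<S> =
  | { status: "done"; state: S }
  | { status: "interrupted"; state: S; before: string };

// Hypothetical runner illustrating checkpoints and breakpoints; not LangGraph's API.
class CheckpointedRunner<S extends object> {
  // In-memory checkpointer keyed by thread ID; a real one would use durable storage.
  private readonly checkpoints = new Map<string, Checkpoint<S>>();

  constructor(
    private readonly steps: Step<S>[],
    private readonly interruptBefore: Set<string>,
  ) {}

  start(threadId: string, initial: S): RunResult<S> {
    const checkpoint = { state: { ...initial }, nextStep: 0 };
    this.checkpoints.set(threadId, checkpoint);
    return this.execute(threadId, checkpoint, false);
  }

  // Resume a paused thread, merging in the human's update first.
  resume(threadId: string, update: Partial<S>): RunResult<S> {
    const saved = this.checkpoints.get(threadId);
    if (!saved) throw new Error(`No checkpoint for thread "${threadId}"`);
    const checkpoint = { ...saved, state: { ...saved.state, ...update } };
    this.checkpoints.set(threadId, checkpoint);
    return this.execute(threadId, checkpoint, true);
  }

  private execute(threadId: string, checkpoint: Checkpoint<S>, resuming: boolean): RunResult<S> {
    let { state, nextStep } = checkpoint;
    let skipBreakpoint = resuming; // the step we paused before may now run
    while (nextStep < this.steps.length) {
      const step = this.steps[nextStep];
      if (this.interruptBefore.has(step.name) && !skipBreakpoint) {
        return { status: "interrupted", state, before: step.name };
      }
      skipBreakpoint = false;
      state = { ...state, ...step.run(state) };
      nextStep += 1;
      // Save after every step so the thread can be resumed from here later.
      this.checkpoints.set(threadId, { state, nextStep });
    }
    return { status: "done", state };
  }
}

interface TaskState {
  request: string;
  approved: boolean;
  log: string[];
}

const runner = new CheckpointedRunner<TaskState>(
  [
    { name: "plan", run: (s) => ({ log: [...s.log, `planned: ${s.request}`] }) },
    { name: "execute", run: (s) => ({ log: [...s.log, s.approved ? "executed" : "cancelled"] }) },
  ],
  new Set(["execute"]), // pause for human approval before "execute"
);

const first = runner.start("thread-1", { request: "refund order", approved: false, log: [] });
console.log(first.status); // interrupted
const second = runner.resume("thread-1", { approved: true });
console.log(second.status, second.state.log.join(", ")); // done planned: refund order, executed
```

Saving after every step, rather than only at the breakpoint, is what would let a process that swaps the Map for a durable store pick up a thread after a crash or restart.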

Example 1: Using LangGraph Primitives

This code shows how to build an agent graph using LangGraph's StateGraph. To do this, we define the agent's state, the nodes, and the edges connecting them. This agent does the same thing as the one in the LlamaIndex section, just with a few more lines of code.

```bash
pnpm add @langchain/langgraph @langchain/openai
```

```typescript
import { Annotation, END, START, StateGraph } from '@langchain/langgraph';
import { ChatOpenAI } from '@langchain/openai';

// The agent state
const graphState = Annotation.Root({
  message: Annotation<string>,
  response: Annotation<string>,
});

// Create the model once and reuse it on every node call
const chatModel = new ChatOpenAI({
  model: 'gpt-4o',
  // apiKey: "YOUR_API_KEY", // keep this commented if you already exported OPENAI_API_KEY globally
});

// Function for the only node of the graph
const callModel = async (state: typeof graphState.State) => {
  const messages = [
    { role: 'system', content: 'You are a helpful assistant.' },
    { role: 'user', content: state.message },
  ];
  const response = await chatModel.invoke(messages);
  // content may be a string or an array of content parts; the state expects a string
  const text =
    typeof response.content === 'string'
      ? response.content
      : JSON.stringify(response.content);
  return { response: text };
};

// Create the agent's graph and compile it into the actual agent
const agent = new StateGraph(graphState)
  .addNode('callModel', callModel)
  .addEdge(START, 'callModel')
  .addEdge('callModel', END)
  .compile();

export const runAgent = async (message: string) => {
  const result = await agent.invoke({ message });
  return result;
};

runAgent('What is the capital of France?')
  .then((response) => {
    console.log('Response:', response);
  })
  .catch(console.error);
```

The output looks like this:

```
Response: {
  message: 'What is the capital of France?',
  response: 'The capital of France is Paris.'
}
```

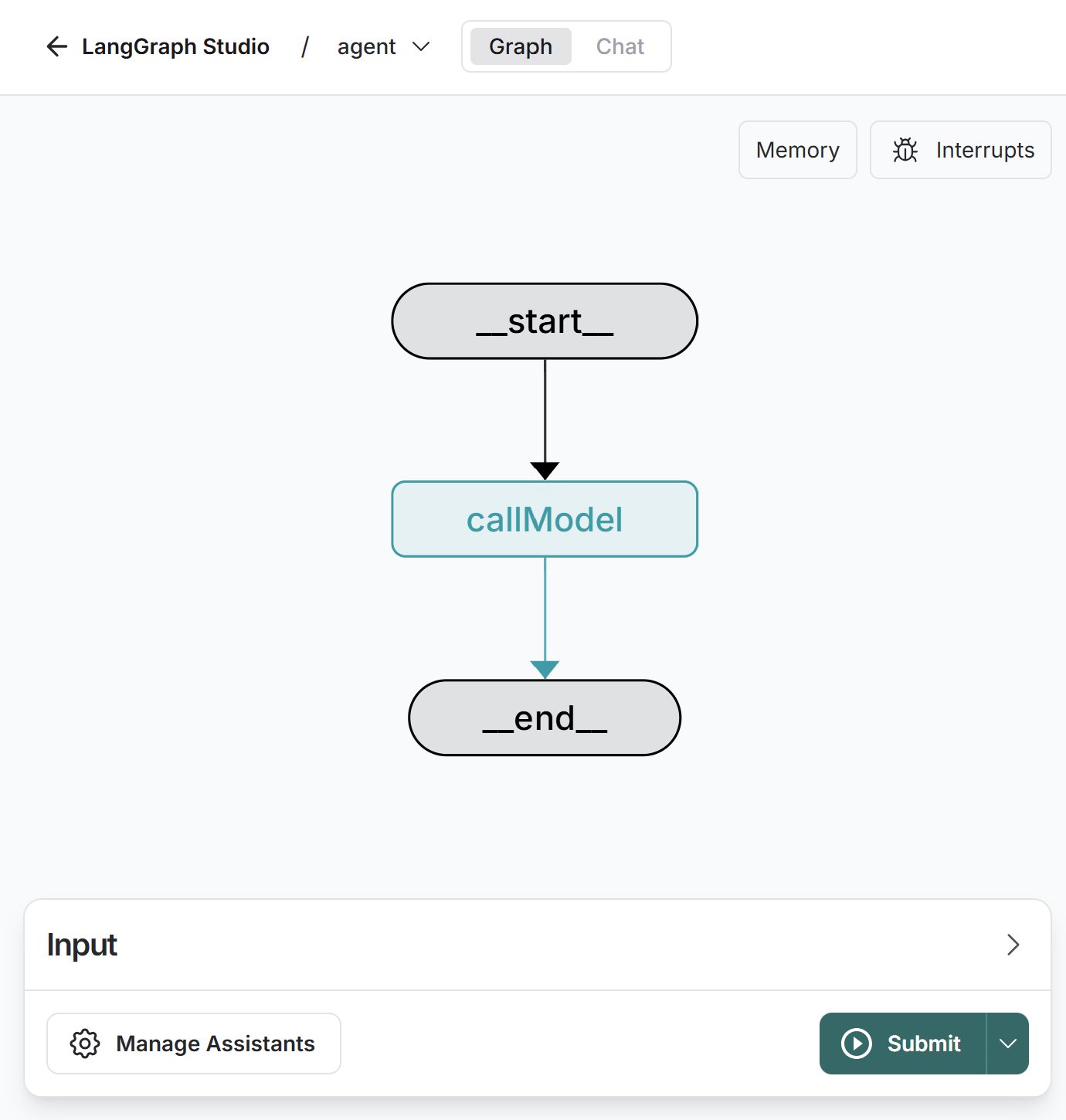

LangGraph Studio

By adding a langgraph.json configuration file and running the LangGraph CLI, we can open the agent in LangGraph Studio, where we can chat with it and inspect its state.

Example 2: Using a Prebuilt Agent

We can also use a prebuilt LangGraph agent to create an agent similar to the one we built with primitives.

```typescript
import { createReactAgent } from "@langchain/langgraph/prebuilt";
import { ChatOpenAI } from "@langchain/openai";

// Using the createReactAgent function to create a simple agent
const agent = createReactAgent({
  llm: new ChatOpenAI({
    model: 'gpt-4o',
    //apiKey: "YOUR_API_KEY"
  }),
  prompt: "You are a helpful assistant.",
  tools: [],
});

export const runAgent = async (message: string) => {
  const result = await agent.invoke({
    messages: [{ role: 'user', content: message }],
  });
  return result;
};

runAgent('What is the capital of France?')
  .then(response => {
    const message = response.messages?.[response.messages.length - 1];
    console.log('Response:', message?.content);
  });
```

Output:

```
Response: The capital of France is Paris.
```
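createReactAgent wires a model step and a tool step into a loop: the model either requests tool calls or gives a final answer, tool results are appended to the message list, and the model is called again. The sketch below reproduces that loop in plain TypeScript with a scripted fake model so it runs without an API key. runReactLoop, fakeModel, and the message shapes are hypothetical and are not LangGraph's internals. With tools: [] as in the example above, the model has no tools to call, so the loop ends after the first model call and the last message is the answer.

```typescript
interface ToolCall {
  name: string;
  args: Record<string, number>;
}

type AgentMessage =
  | { role: "user" | "assistant"; content: string; toolCalls?: ToolCall[] }
  | { role: "tool"; content: string; toolName: string };

// A model reads the conversation and either answers or requests tool calls.
type Model = (messages: AgentMessage[]) => { content: string; toolCalls: ToolCall[] };
type Tool = (args: Record<string, number>) => string;

// Hypothetical ReAct loop; not LangGraph's createReactAgent implementation.
function runReactLoop(
  model: Model,
  tools: Record<string, Tool>,
  question: string,
  maxTurns = 5,
): AgentMessage[] {
  const messages: AgentMessage[] = [{ role: "user", content: question }];
  for (let turn = 0; turn < maxTurns; turn++) {
    // Model step: decide whether to answer or to act.
    const reply = model(messages);
    messages.push({ role: "assistant", content: reply.content, toolCalls: reply.toolCalls });
    // No tool calls means this reply is the final answer.
    if (reply.toolCalls.length === 0) return messages;
    // Tool step: run each requested tool and append its result.
    for (const call of reply.toolCalls) {
      const tool: Tool | undefined = tools[call.name];
      const output = tool ? tool(call.args) : `Unknown tool: ${call.name}`;
      messages.push({ role: "tool", content: output, toolName: call.name });
    }
  }
  throw new Error("Agent did not finish within maxTurns");
}

// Scripted stand-in for an LLM so the sketch runs without an API key.
const fakeModel: Model = (messages) => {
  const last = messages[messages.length - 1];
  if (last.role === "tool") return { content: `The sum is ${last.content}.`, toolCalls: [] };
  const nums = last.content.split(/\D+/).filter(Boolean).map(Number);
  return nums.length === 2
    ? { content: "", toolCalls: [{ name: "sumNumbers", args: { a: nums[0], b: nums[1] } }] }
    : { content: "I can only add two numbers.", toolCalls: [] };
};

const tools: Record<string, Tool> = {
  sumNumbers: ({ a, b }) => String(a + b),
};

const transcript = runReactLoop(fakeModel, tools, "What is 2 + 3?");
console.log(transcript.map((m) => m.role).join(" -> ")); // user -> assistant -> tool -> assistant
console.log(transcript[transcript.length - 1].content); // The sum is 5.
```

The maxTurns cap plays the same protective role as a recursion limit: a model that keeps requesting tools cannot loop forever.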

Key Features of LangGraph.js

- Built-in support for building ReAct, Swarm, and other agent patterns

- Support for open-source agent evaluation via agentevals, including createTrajectoryMatchEvaluator, LLM-as-judge, and more

- LangGraph Server for deploying graph-based agents as APIs

- LangGraph Studio and prebuilt Chat UI for local testing and debugging

- Support for community agents and agent templates

- Native integration with LangChain and LangSmith for tool management, observability, and tracing
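Trajectory evaluators such as createTrajectoryMatchEvaluator check the path an agent took, not just its final answer, by comparing the tool calls it made against a reference trajectory. As a hedged illustration of the idea, here is a hypothetical matchTrajectory function in plain TypeScript with two comparison modes: strict (same calls in the same order) and unordered (same calls in any order). It is not the agentevals API, which works on full message trajectories and offers more modes and options; getWeather and getTime are made-up tool names.

```typescript
interface ToolCall {
  name: string;
  args: Record<string, unknown>;
}

type MatchMode = "strict" | "unordered";

// Canonical string for a call so two calls can be compared by value.
// Sorting the top-level argument keys makes { a, b } equal to { b, a }.
const callKey = (call: ToolCall): string =>
  JSON.stringify([
    call.name,
    Object.keys(call.args)
      .sort()
      .map((k) => [k, call.args[k]]),
  ]);

// Hypothetical trajectory matcher; not the agentevals API.
function matchTrajectory(actual: ToolCall[], reference: ToolCall[], mode: MatchMode): boolean {
  if (actual.length !== reference.length) return false;
  const got = actual.map(callKey);
  const want = reference.map(callKey);
  if (mode === "unordered") {
    // Compare as multisets: sort both lists, then compare position by position.
    got.sort();
    want.sort();
  }
  // Strict (or sorted unordered) comparison: same calls at the same positions.
  return got.every((k, i) => k === want[i]);
}

const reference: ToolCall[] = [
  { name: "getWeather", args: { city: "Paris" } },
  { name: "getTime", args: { city: "Paris" } },
];
const actual: ToolCall[] = [
  { name: "getTime", args: { city: "Paris" } },
  { name: "getWeather", args: { city: "Paris" } },
];

console.log(matchTrajectory(actual, reference, "strict")); // false
console.log(matchTrajectory(actual, reference, "unordered")); // true
```

Strict matching suits workflows where order matters (for example, look something up before acting on it), while unordered matching tolerates independent calls made in a different sequence.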

Comparison Table

With both frameworks outlined, let’s directly compare their strengths and trade-offs.

| Criteria | LlamaIndex.TS (as of June 9, 2025) | LangGraph.js (as of June 9, 2025) |
|---|---|---|
| GitHub Stars | ~2.7k ⭐ | ~1.6k ⭐ |
| npm Weekly Downloads | ~60k | ~377k |
| Tooling Support | Data loaders, vector stores, workflows, CLI, chat UI | CLI, LangGraph Server, Studio, agent templates |
| Learning Curve | Moderate: event-driven, data-focused patterns | Steeper: graph modeling, state control, custom logic |
| Planning / Routing | Workflows + event handlers for tool and query flows | Explicit node → edge graphs, conditionals, state-driven |
| Integration Ecosystem | Strong in RAG, vector DBs, file readers, MCP, etc. | LangChain, LangSmith, Open Agents, MCP, community |
| TS/Node.js Compatibility | Full support (Node, Deno, Bun, Cloudflare, Next.js) | Native TS SDK, LangGraph SDK, etc. |
| Deployment Flexibility | LlamaIndex Server (Next.js, FastAPI), CLI scaffolding | LangGraph Platform, bundler, edge-ready, prebuilt UIs |

Which Should You Choose?

If you're looking for something quick to learn, focused on RAG use cases, or a framework that abstracts away much of the complexity, I recommend LlamaIndex.TS, especially if you're building a full-stack app with Next.js. Be aware, however, that at the time of writing the documentation is still maturing: many functions are listed in the API reference, but implementation examples and usage guides are not always available.

On the other hand, if you want more control over your agent and a more general-purpose framework that is not limited to RAG, LangGraph.js is a great option, especially when paired with LangChain.js. A key advantage of LangGraph is that it is not tightly coupled to LangChain, so you can use it on its own or integrate it with other AI frameworks. This makes it well suited to structuring complex agent logic with flexibility.

Conclusion

LlamaIndex.TS and LangGraph.js serve different needs in the JavaScript AI ecosystem. Your choice depends on whether you value speed and simplicity or control and flexibility. Both are evolving rapidly, so the best tool is the one that aligns with your current goals and stack.

Curious to see these frameworks in action? Check out the follow-up article: Building a Full-Stack AI Agent App with LangGraph.js & NestJS.

