Run Any MCP Server Locally with Docker’s MCP Catalog and Toolkit

- Author: Ali Ibrahim

Introduction

Docker isn't just for containers anymore; it's now at the heart of how AI tools are run, discovered, and integrated.

After launching the Docker Model Runner, Docker introduced another powerful piece: the MCP Catalog and Toolkit. These tools make it possible to spin up AI-ready services like GitHub, Stripe, or browser automation tools in seconds, securely and locally, all through containers.

At the core of this system is the Model Context Protocol (MCP), a new open standard originally introduced by Anthropic and now supported by major AI players including Docker, OpenAI, and Google. MCP defines how large language models can discover, describe, and invoke external tools. It solves a critical pain point: connecting LLMs to real-world actions without writing custom logic for each use case.
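To make "discover" and "invoke" concrete: MCP messages are JSON-RPC 2.0, and a client typically sends a `tools/list` request to discover tools and a `tools/call` request to invoke one. The sketch below only builds those two request payloads. The tool name `search` and its `query` argument are placeholders for illustration, not taken from a specific server.

```typescript
// Minimal shape of a JSON-RPC 2.0 request as used by MCP.
interface JsonRpcRequest {
  jsonrpc: '2.0'
  id: number
  method: string
  params?: Record<string, unknown>
}

// Discovery: ask the server which tools it exposes.
function listToolsRequest(id: number): JsonRpcRequest {
  return { jsonrpc: '2.0', id, method: 'tools/list' }
}

// Invocation: call one tool by name with JSON arguments.
function callToolRequest(id: number, name: string, args: Record<string, unknown>): JsonRpcRequest {
  return { jsonrpc: '2.0', id, method: 'tools/call', params: { name, arguments: args } }
}

// Hypothetical tool name and argument, for illustration only.
const discover = listToolsRequest(1)
const invoke = callToolRequest(2, 'search', { query: 'latest AI news' })

console.log(JSON.stringify(discover))
console.log(JSON.stringify(invoke))
```

In practice you rarely build these by hand; an MCP client SDK sends them for you.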

In this article, you'll learn:

- What the MCP Catalog and Toolkit do

- How to run your first MCP server using Docker Desktop

- How to integrate it with OpenAI's TypeScript SDK to build a local, functional agent

What is the MCP Catalog?

The MCP Catalog is a curated registry of containerized MCP Servers. These servers can be anything from API wrappers (like GitHub or Stripe) to system utilities (like Puppeteer or DuckDuckGo), and they're packaged as secure, portable Docker images.

The catalog solves real pain points that come with integrating tools into AI systems:

- No environment clashes: each server runs in isolation inside its own container.

- No manual setup: tools are preconfigured and ready to run with one click.

- No OS-specific weirdness: everything runs the same way, everywhere.

You can browse and launch these servers directly from Docker Desktop, or pull them via the CLI. Each listing includes metadata, versioning, and example configs to help you integrate the MCP Server into your agent workflows.

In short: the MCP Catalog gives you a plug-and-play toolbox for building smarter agents, minus the infrastructure headaches.

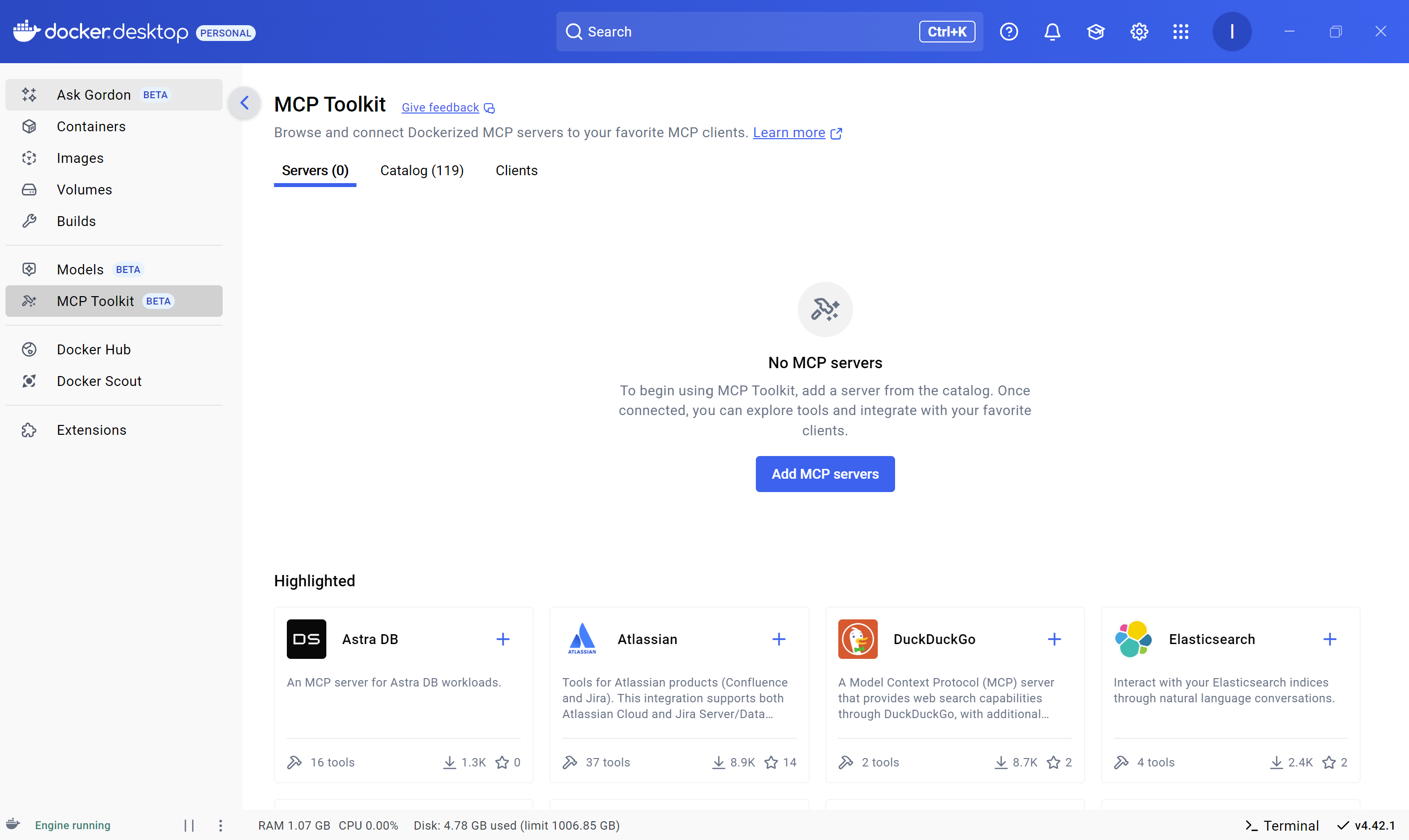

What is the MCP Toolkit?

If the MCP Catalog gives you the servers, the MCP Toolkit gives you the control panel.

It’s a built-in feature of Docker Desktop that helps you:

- Discover and launch MCP Servers

- Run them as containers with secure defaults

- Connect them to your favorite AI clients (like Claude, Cursor, or your own custom app)

Under the hood, the toolkit acts as a gateway between AI applications (called “MCP clients”) and the MCP servers you’ve installed. It removes the friction of integration, turning what used to be days of setup into a few clicks.

Whether you're running a GitHub wrapper or doing web searches via DuckDuckGo, the Toolkit handles all the complexity: container orchestration, isolation, routing, and security.

In short: it lets you go from "I want to call this API" to "My agent just used it".

Security

When you’re running tools that can access APIs, files, or the internet, security isn’t optional, especially if your AI agents are calling them automatically.

The Docker MCP Toolkit provides several layers of protection, both at the image level and during runtime:

Image level Security

- All MCP servers in the mcp/ namespace are built and signed by Docker.

- Each image includes a Software Bill of Materials (SBOM), so you know exactly what’s inside before you run it.

Runtime Isolation

Every MCP server runs in a locked-down container:

- CPU & memory limits: Containers are capped at 1 CPU and 2 GB RAM to prevent abuse or runaway processes.

- Filesystem safety: Tools can’t touch your host system unless you explicitly grant them access.

- Secrets protection: Requests containing sensitive data are automatically intercepted and blocked.

Together, these controls help you confidently run and test tools locally, without putting your system, tokens, or data at risk.
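For intuition, the resource caps above resemble what Docker's standard `--cpus` and `--memory` flags give you on a plain `docker run`. The sketch below builds such an argument list. It illustrates equivalent limits only; it is not the command the Toolkit actually runs, and the image name `mcp/duckduckgo` is an assumption.

```typescript
interface Limits {
  cpus: number
  memoryGb: number
}

// Build `docker run` arguments that cap CPU and memory.
// No volumes are mounted, so the container cannot see host files.
function dockerRunArgs(image: string, limits: Limits): string[] {
  return [
    'run',
    '--rm', // remove the container when it exits
    '-i', // keep stdin open (stdio-based servers read from it)
    '--cpus', String(limits.cpus),
    '--memory', `${limits.memoryGb}g`,
    image,
  ]
}

// Assumed image name, for illustration only.
const args = dockerRunArgs('mcp/duckduckgo', { cpus: 1, memoryGb: 2 })
console.log(['docker', ...args].join(' '))
// prints: docker run --rm -i --cpus 1 --memory 2g mcp/duckduckgo
```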

How to Use the MCP Toolkit

The MCP Toolkit in Docker Desktop makes it easy to go from "I want to use this tool" to "My agent can call it."

Here’s the high-level flow:

- Enable the Toolkit

- Install an MCP server (e.g., GitHub or DuckDuckGo)

- (Optional) Configure it with API keys

- Connect an MCP client (e.g., Claude, Cursor, or your own agent)

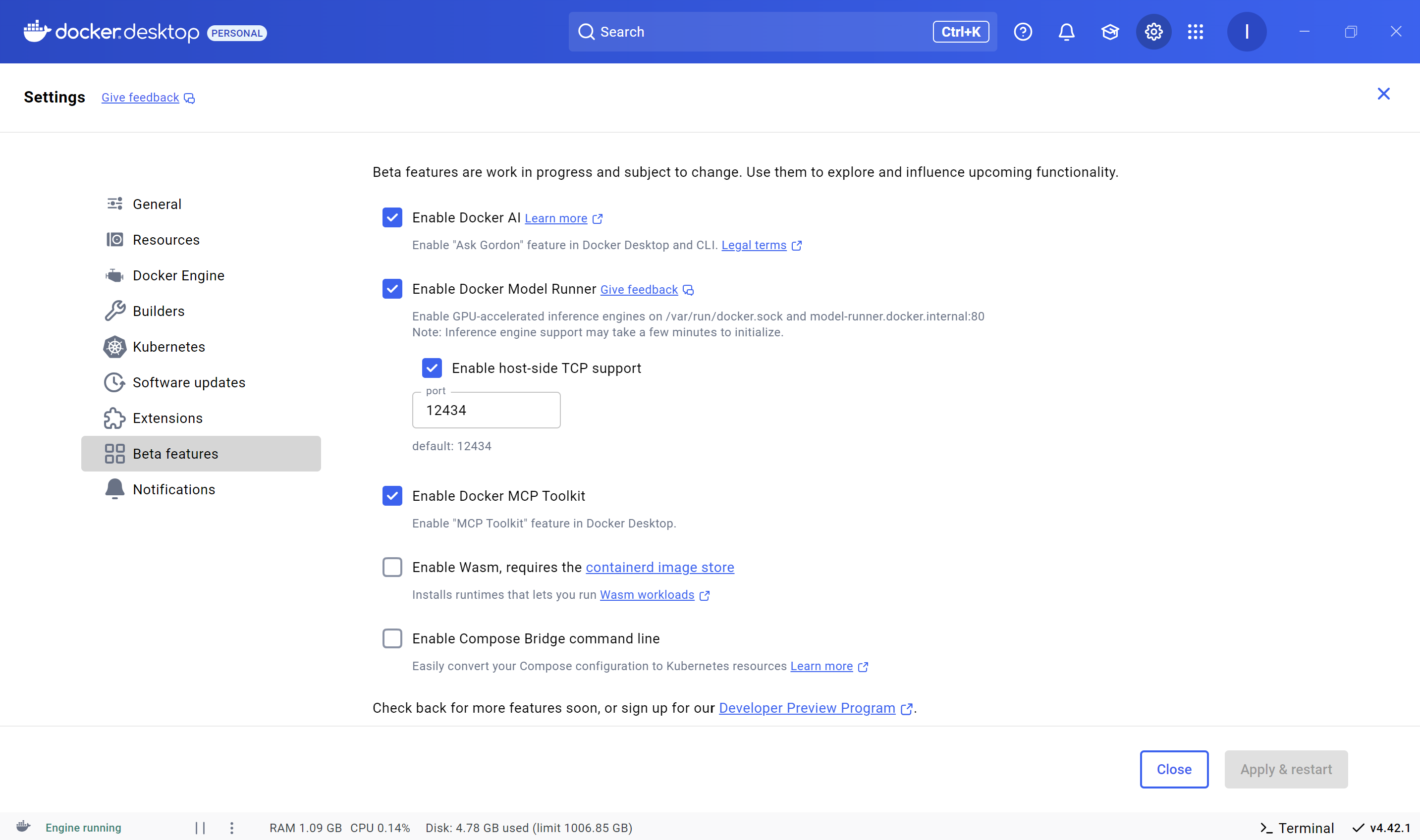

Step 1: Enable Docker MCP Toolkit

First, make sure you’re on Docker Desktop 4.42+ (Windows) or 4.40+ (macOS).

- Open Docker Desktop settings

- Go to Beta features

- Enable Docker MCP Toolkit

- Click Apply & Restart

This activates the Catalog, the MCP gateway, and client-server integration features.

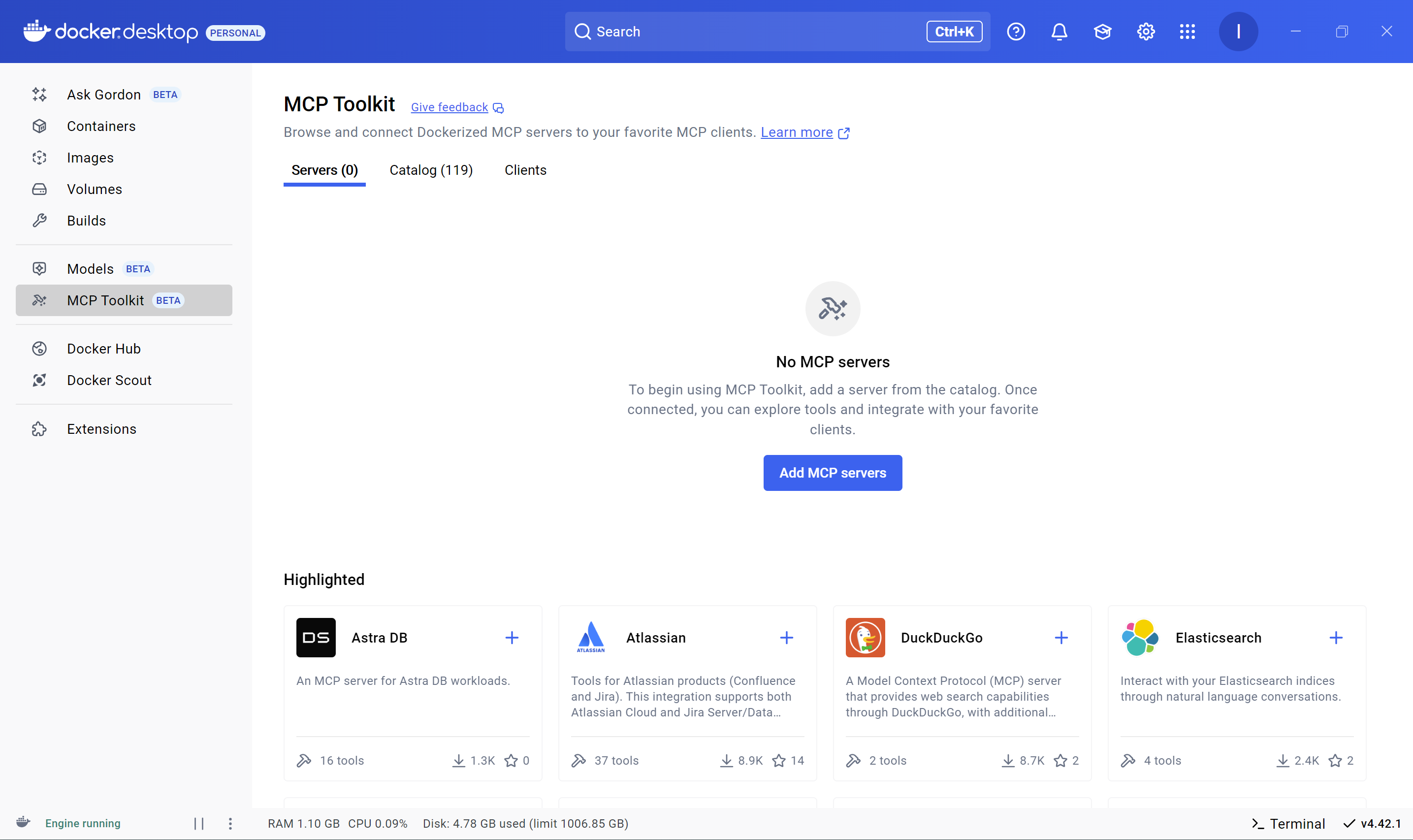

Step 2: Install an MCP Server

- In Docker Desktop, go to MCP Toolkit → Catalog

- Browse the list of available tools

- Click the plus icon to install the server you want

- (Optional) Some tools need extra configuration; see the Config tab for instructions

Example: GitHub MCP Server

- Find and install the GitHub MCP server in the Catalog

- In the Config tab, enter your GitHub personal access token (needed to access the GitHub API)

You’ll now see GitHub listed in your Servers tab.

You can even use GitHub tools directly inside Docker’s built-in assistant Ask Gordon.

Step 3: Add an MCP Client

Clients are AI frontends (like Claude Desktop, Cursor, or your own agent) that connect to the MCP gateway and invoke tools.

To connect one:

- Go to MCP Toolkit → Clients

- Select a supported client

- Click Connect

You can also build your own client (e.g., using OpenAI's SDK + docker mcp gateway run). We’ll cover that in the next section.

Once everything is connected, your local agent setup becomes powerful: any client can securely call any installed server, all isolated inside containers and coordinated by Docker.
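For clients that use the common `mcpServers` JSON layout (Claude Desktop, for example), connecting amounts to registering the gateway command in the client's configuration. The sketch below shows roughly what such an entry looks like. The server key `MCP_DOCKER` is an assumption on my part; check the file your client actually writes.

```typescript
interface McpServerEntry {
  command: string
  args: string[]
  env?: Record<string, string>
}

interface McpClientConfig {
  mcpServers: Record<string, McpServerEntry>
}

// The key name is an assumption; the command matches the gateway
// invocation used throughout this article.
const config: McpClientConfig = {
  mcpServers: {
    MCP_DOCKER: { command: 'docker', args: ['mcp', 'gateway', 'run'] },
  },
}

const json = JSON.stringify(config, null, 2)
console.log(json)
```

The client launches that command as a child process and talks to it over stdin and stdout, which is the same mechanism a custom client can use.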

Demo: Docker MCP Toolkit + OpenAI SDK (TypeScript)

In this demo, we'll use the openai package and the DuckDuckGo MCP server from the MCP Catalog to build a simple agent that answers questions by running a web search through DuckDuckGo. To do that, we first need to enable the DuckDuckGo MCP server in Docker Desktop:

MCP Catalog and Toolkit Setup

- Open Docker Desktop and navigate to the MCP Catalog tab.

- Enable the DuckDuckGo MCP server by clicking the + button.

Note: DuckDuckGo does not require an API key. For other servers, you may need to provide an API key or additional configuration.

Setup

# Create project directory

mkdir docker-mcp-catalog-demo

cd docker-mcp-catalog-demo

# Initialize project

pnpm init # or npm init -y

pnpm install openai @modelcontextprotocol/sdk tsx

package.json

{

"name": "docker-mcp-catalog-demo",

"version": "1.0.0",

"type": "module",

"scripts": {

"start": "tsx src/main.ts"

},

"dependencies": {

"@modelcontextprotocol/sdk": "^1.13.2",

"openai": "^5.6.0",

"tsx": "^4.20.3"

}

}

tsconfig.json

{

"compilerOptions": {

"target": "ES2022",

"module": "ESNext",

"moduleResolution": "node",

"esModuleInterop": true,

"strict": true,

"skipLibCheck": true,

"outDir": "dist"

},

"include": ["src/**/*"],

"exclude": ["node_modules"]

}

Create Entry File

# On Linux or macOS

mkdir src && touch src/main.ts

# On Windows (PowerShell)

mkdir src ; New-Item -Path src -Name main.ts

Add imports in src/main.ts

import OpenAI from 'openai'

import { Client } from '@modelcontextprotocol/sdk/client/index.js'

import { StdioClientTransport } from '@modelcontextprotocol/sdk/client/stdio.js'

import type { Tool } from '@modelcontextprotocol/sdk/types.js'

Initialize clients src/main.ts

const openAIClient = new OpenAI()

const mcpClient = new Client(

{

name: 'client-mcp',

version: '1.0.0',

},

{

capabilities: {

prompts: {},

resources: {},

tools: {},

},

}

)

const mcpTransport = new StdioClientTransport({

command: 'docker',

args: ['mcp', 'gateway', 'run'],

env: {

LOCALAPPDATA: 'C:\\Users\\{YOUR_WINDOWS_USER_NAME}\\AppData\\Local', // This depends on your OS

ProgramFiles: 'C:\\Program Files', // This depends on your OS

},

})

In this demo, we’re building a custom MCP client using the MCP TypeScript SDK. To connect, we use the docker mcp gateway run command through the StdioClientTransport.

On Windows, this requires setting two environment variables: LOCALAPPDATA and ProgramFiles. Docker uses these paths to locate the configuration it needs to connect to an MCP server.

I discovered this by reverse-engineering how Claude Desktop connects to Docker. Once the client was linked, I inspected the config file Claude stores locally and copied the values Docker added for the connection.

This approach may vary depending on your OS. Linux and macOS users may not need to set these variables, or might require different values.
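One way to avoid hard-coding a Windows user name is to forward the two variables from the parent process only when running on Windows. The helper below is a sketch under that assumption; `gatewayEnv` is a hypothetical name, and I have not verified what Linux or macOS need beyond the defaults.

```typescript
type Env = Record<string, string | undefined>

// Returns the extra variables to pass to the gateway process.
// On Windows, forward LOCALAPPDATA and ProgramFiles from the parent
// environment. Elsewhere, return undefined so no custom env is set.
function gatewayEnv(platform: string, parentEnv: Env): Record<string, string> | undefined {
  if (platform !== 'win32') return undefined
  const out: Record<string, string> = {}
  for (const key of ['LOCALAPPDATA', 'ProgramFiles']) {
    const value = parentEnv[key]
    if (value !== undefined) out[key] = value
  }
  return out
}

// Example inputs; in main.ts you would pass process.platform and process.env.
const windowsEnv = gatewayEnv('win32', {
  LOCALAPPDATA: 'C:\\Users\\me\\AppData\\Local',
  ProgramFiles: 'C:\\Program Files',
  PATH: 'C:\\Windows',
})
const linuxEnv = gatewayEnv('linux', { HOME: '/home/me' })

console.log(windowsEnv, linuxEnv)
```

In `src/main.ts` the result would go into the transport options as `env: gatewayEnv(process.platform, process.env)`. Returning `undefined` on other platforms should let the transport fall back to its default environment rather than starting the child process with an empty one.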

Create utility functions src/main.ts

async function listTools() {

const response = await mcpClient.listTools()

return response.tools

}

function toOpenAITools(tools: Tool[]) {

return tools.map((tool: Tool) => ({

type: 'function',

function: {

name: tool.name,

description: tool.description,

parameters: { ...tool.inputSchema },

strict: false,

},

}))

}
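To see what this conversion produces, here is a self-contained sketch with local stand-in types instead of the SDK imports. The `McpTool` and `OpenAIFunctionTool` interfaces are simplified assumptions, and the `search` tool with its `query` parameter is a hypothetical example, not the DuckDuckGo server's actual schema.

```typescript
// Simplified stand-ins for the MCP and OpenAI tool shapes.
interface McpTool {
  name: string
  description?: string
  inputSchema: { type: 'object'; properties?: Record<string, unknown>; required?: string[] }
}

interface OpenAIFunctionTool {
  type: 'function'
  function: {
    name: string
    description?: string
    parameters: Record<string, unknown>
    strict: boolean
  }
}

// The MCP input schema is already JSON Schema, so it maps directly
// onto the `parameters` field of an OpenAI function tool.
function toOpenAITools(tools: McpTool[]): OpenAIFunctionTool[] {
  return tools.map((tool) => ({
    type: 'function',
    function: {
      name: tool.name,
      description: tool.description,
      parameters: { ...tool.inputSchema },
      strict: false,
    },
  }))
}

// Hypothetical tool definition, for illustration only.
const sample: McpTool[] = [
  {
    name: 'search',
    description: 'Search the web',
    inputSchema: { type: 'object', properties: { query: { type: 'string' } }, required: ['query'] },
  },
]

const converted = toOpenAITools(sample)
console.log(JSON.stringify(converted, null, 2))
```

Giving the function an explicit return type keeps `type` narrowed to the literal `'function'`, which helps if you later type-check the code instead of only running it.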

Create the call tool function src/main.ts

async function callTool(messages: any[], completionWithTools: any, openAITools: any[]) {

const toolCalls = completionWithTools.choices[0].message.tool_calls

if (!toolCalls) {

console.log('No tool calls found in the completion response.')

return

}

const toolCall = toolCalls[0]

const args = JSON.parse(toolCall.function.arguments)

const result = await mcpClient.callTool({

name: toolCall.function.name,

arguments: args,

})

messages.push(completionWithTools.choices[0].message)

messages.push({

role: 'tool',

tool_call_id: toolCall.id,

content: result.content,

})

const completion2 = await openAIClient.chat.completions.create({

messages,

model: 'gpt-4o-mini',

tools: openAITools,

})

console.log(completion2.choices[0].message?.content)

}
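The function above handles only the first tool call. If the model requests several at once, each one needs its own tool message, so a possible extension is sketched below. It is a simplified variant with local types: `answerToolCalls` and `resultToText` are hypothetical helpers, and `invoke` stands in for a wrapper around `mcpClient.callTool`.

```typescript
interface ToolCall {
  id: string
  function: { name: string; arguments: string }
}

interface ContentBlock {
  type: string
  text?: string
}

interface ToolMessage {
  role: 'tool'
  tool_call_id: string
  content: string
}

// Join the text blocks of a tool result into one string.
function resultToText(content: ContentBlock[]): string {
  return content
    .filter((block) => block.type === 'text' && typeof block.text === 'string')
    .map((block) => block.text as string)
    .join('\n')
}

// Run every requested tool call and return one tool message per call.
async function answerToolCalls(
  toolCalls: ToolCall[],
  invoke: (name: string, args: Record<string, unknown>) => Promise<ContentBlock[]>,
): Promise<ToolMessage[]> {
  const messages: ToolMessage[] = []
  for (const call of toolCalls) {
    const args = JSON.parse(call.function.arguments || '{}') as Record<string, unknown>
    const content = await invoke(call.function.name, args)
    messages.push({ role: 'tool', tool_call_id: call.id, content: resultToText(content) })
  }
  return messages
}

// Fake tool runner so the sketch runs without Docker or network access.
const fakeInvoke = async (name: string, args: Record<string, unknown>): Promise<ContentBlock[]> => [
  { type: 'text', text: `${name}:${String(args.query)}` },
]

const demo = answerToolCalls(
  [
    { id: 'call_1', function: { name: 'search', arguments: '{"query":"a"}' } },
    { id: 'call_2', function: { name: 'search', arguments: '{"query":"b"}' } },
  ],
  fakeInvoke,
)
demo.then((messages) => console.log(messages))
```

The returned messages would be pushed onto `messages` after the assistant message, before requesting the second completion.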

Create the main function src/main.ts

async function main() {

await mcpClient.connect(mcpTransport)

const mcpTools = await listTools()

const openAITools = toOpenAITools(mcpTools)

const userMessage = { role: 'user', content: 'What are the latest news in AI as of June 2025' }

const completion = await openAIClient.chat.completions.create({

messages: [userMessage],

model: 'gpt-4o-mini',

tools: openAITools,

})

await callTool([userMessage], completion, openAITools)

await mcpClient.close()

}

main().catch((error) => {

console.error('Error:', error)

})

The complete src/main.ts

import OpenAI from 'openai'

import { Client } from '@modelcontextprotocol/sdk/client/index.js'

import { StdioClientTransport } from '@modelcontextprotocol/sdk/client/stdio.js'

import type { Tool } from '@modelcontextprotocol/sdk/types.js'

const openAIClient = new OpenAI()

const mcpClient = new Client(

{

name: 'client-mcp',

version: '1.0.0',

},

{

capabilities: {

prompts: {},

resources: {},

tools: {},

},

}

)

const mcpTransport = new StdioClientTransport({

command: 'docker',

args: ['mcp', 'gateway', 'run'],

env: {

LOCALAPPDATA: 'C:\\Users\\{YOUR_WINDOWS_USER_NAME}\\AppData\\Local',

ProgramFiles: 'C:\\Program Files',

},

})

async function listTools() {

const response = await mcpClient.listTools()

return response.tools

}

function toOpenAITools(tools: Tool[]) {

return tools.map((tool: Tool) => ({

type: 'function',

function: {

name: tool.name,

description: tool.description,

parameters: { ...tool.inputSchema },

strict: false,

},

}))

}

async function callTool(messages: any[], completionWithTools: any, openAITools: any[]) {

const toolCalls = completionWithTools.choices[0].message.tool_calls

if (!toolCalls) {

console.log('No tool calls found in the completion response.')

return

}

const toolCall = toolCalls[0]

const args = JSON.parse(toolCall.function.arguments)

const result = await mcpClient.callTool({

name: toolCall.function.name,

arguments: args,

})

messages.push(completionWithTools.choices[0].message)

messages.push({

role: 'tool',

tool_call_id: toolCall.id,

content: result.content,

})

const completion2 = await openAIClient.chat.completions.create({

messages,

model: 'gpt-4o-mini',

tools: openAITools,

})

console.log(completion2.choices[0].message?.content)

}

async function main() {

await mcpClient.connect(mcpTransport)

const mcpTools = await listTools()

const openAITools = toOpenAITools(mcpTools)

const userMessage = { role: 'user', content: 'What are the latest news in AI as of June 2025' }

const completion = await openAIClient.chat.completions.create({

messages: [userMessage],

model: 'gpt-4o-mini',

tools: openAITools,

})

await callTool([userMessage], completion, openAITools)

await mcpClient.close()

}

main().catch((error) => {

console.error('Error:', error)

})

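Run

The OpenAI client reads your API key from the OPENAI_API_KEY environment variable, so make sure it is set before starting the script.

pnpm start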

Expected Output

Here are some sources where you can find the latest news in AI as of June 2025:

1. **TechCrunch - AI News & Artificial Intelligence**

[Visit TechCrunch](https://techcrunch.com/category/artificial-intelligence/)

TechCrunch reports on the latest in artificial intelligence and machine learning tech, companies involved, and the ethical issues surrounding AI.

2. **Artificial Intelligence News**

[Visit AI News](https://www.artificialintelligence-news.com/)

This site provides insights and reports on the latest trends and developments in the AI industry.

3. **Reuters - AI News**

[Visit Reuters](https://www.reuters.com/technology/artificial-intelligence/)

Reuters covers business, financial, national, and international news, including developments in artificial intelligence.

4. **MIT News - Artificial Intelligence**

[Visit MIT News](https://news.mit.edu/topic/artificial-intelligence2)

5. **AP News - Artificial Intelligence**

[Visit AP News](https://apnews.com/hub/artificial-intelligence)

The Associated Press provides the latest updates on artificial intelligence, including legal cases and significant technological advancements.

You can explore these sources for detailed and up-to-date information on the advancements and discussions in AI.

Conclusion

The Model Context Protocol is reshaping how AI agents interact with the world, and with Docker’s MCP Catalog and Toolkit, it's never been easier to bring those capabilities to your local dev environment.

You now have everything you need to:

- Discover and run secure, containerized MCP servers

- Connect them to your AI agents using OpenAI’s SDK

- Prototype real-world use cases like API calls and search, all without deploying to the cloud

If you're building AI agents or exploring how to integrate tools into your workflows, check out Agentailor. It’s where I share experiments, tutorials, and tools for developers working on the next generation of AI systems.
