
How I Built and Deployed a Production-Ready AI SaaS in 14 Days Using Agent Initializr

Author: Ali Ibrahim

Introduction

I built and deployed a fullstack, multi-tenant AI SaaS product in under two weeks. The most interesting part: the AI was the easy part. The real challenge, the thing that usually takes weeks of tedious work, is all the plumbing around it: authentication, streaming, memory, and message queues. This is the story of how I used my own scaffolding tool to skip the boilerplate and go from idea to production.

When I started thinking about the Playground, I didn’t picture a fancy UI or clever branding. I pictured the boring but critical stuff every conversational AI SaaS needs:

- Support for multiple AI models

- Thread management

- Memory and chat history management

- Streaming responses

- External tool integration

- Message queuing

That’s the kind of plumbing that eats up weeks before you even get to iterate on your agent’s behavior.

And I didn’t want to spend weeks. I wanted to go from idea to a working product as quickly as possible, without cutting corners on architecture.

So instead of hand-coding all of that from scratch, I reached for something I’d been quietly building for months: Agent Initializr.

Why This Project Exists

The Playground wasn’t meant to be “a product” in the usual sense. It was an experiment.

I wanted to see how far I could push my own scaffolding tool: could it really serve as the foundation for a real, multi-tenant AI SaaS? Not just a demo, but something stable enough for developers to test their own agents with.

The goal was simple:

- Let a developer create an AI agent in minutes.

- Let them give it tools — like Slack, GitHub, or Notion — without writing any integration code.

- Let them interact with it via chat or their own API calls.

The constraint was also simple: move from idea to deployed MVP in the shortest time possible.

If I could build the Playground in under two weeks, with production-grade features, then Agent Initializr wasn’t just a scaffolding tool. It was a launch accelerator.

Generating the Foundation

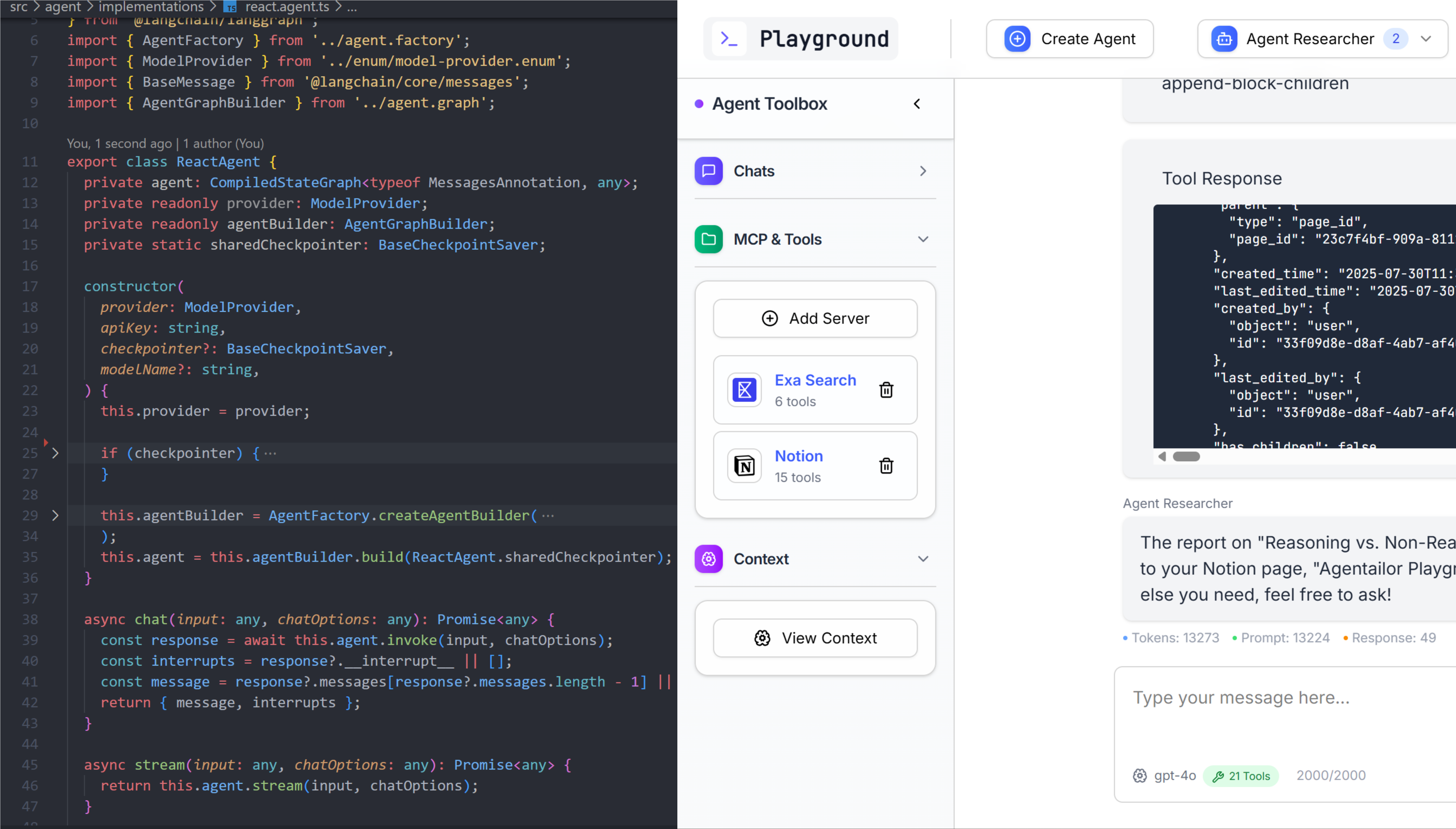

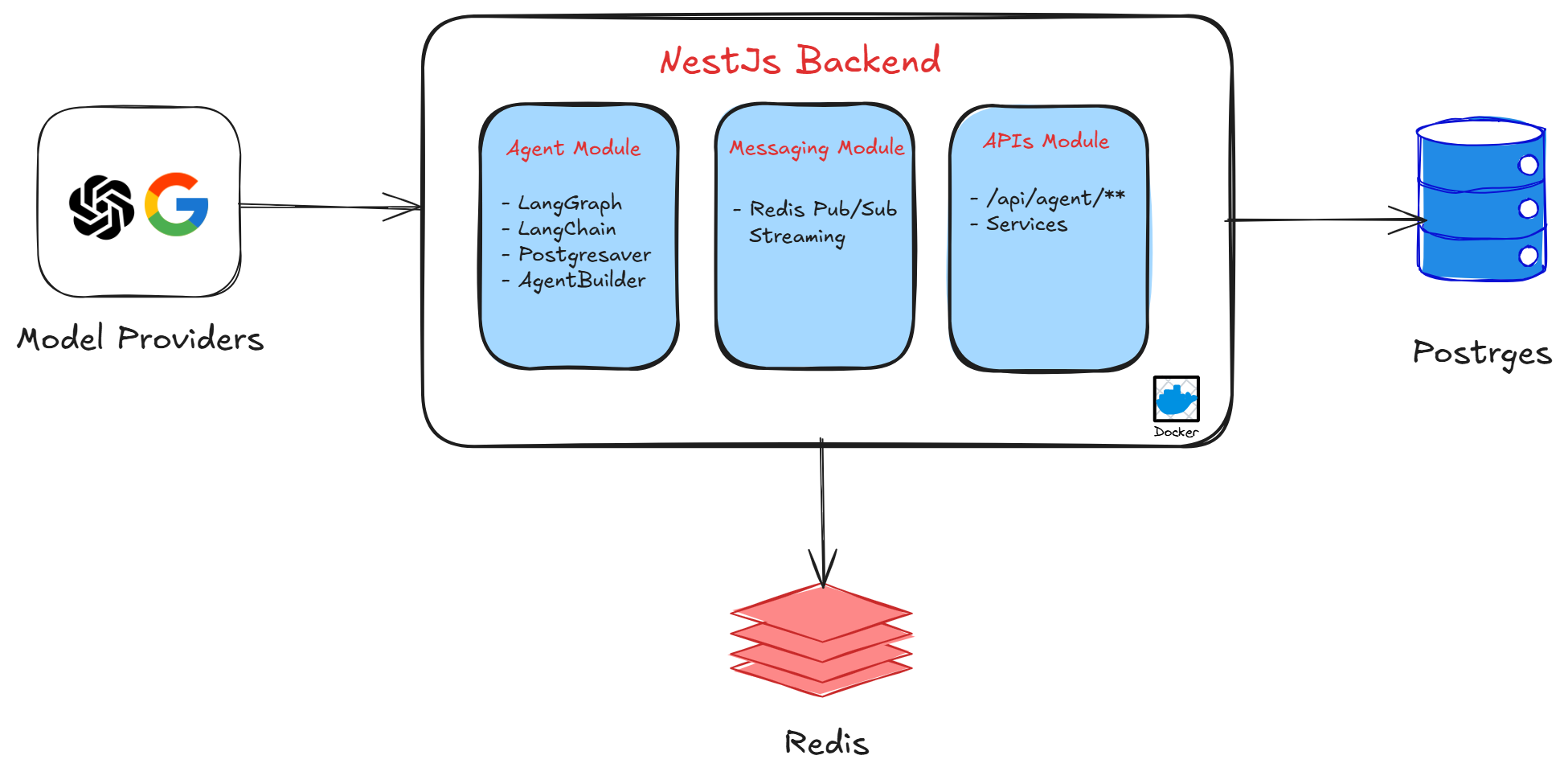

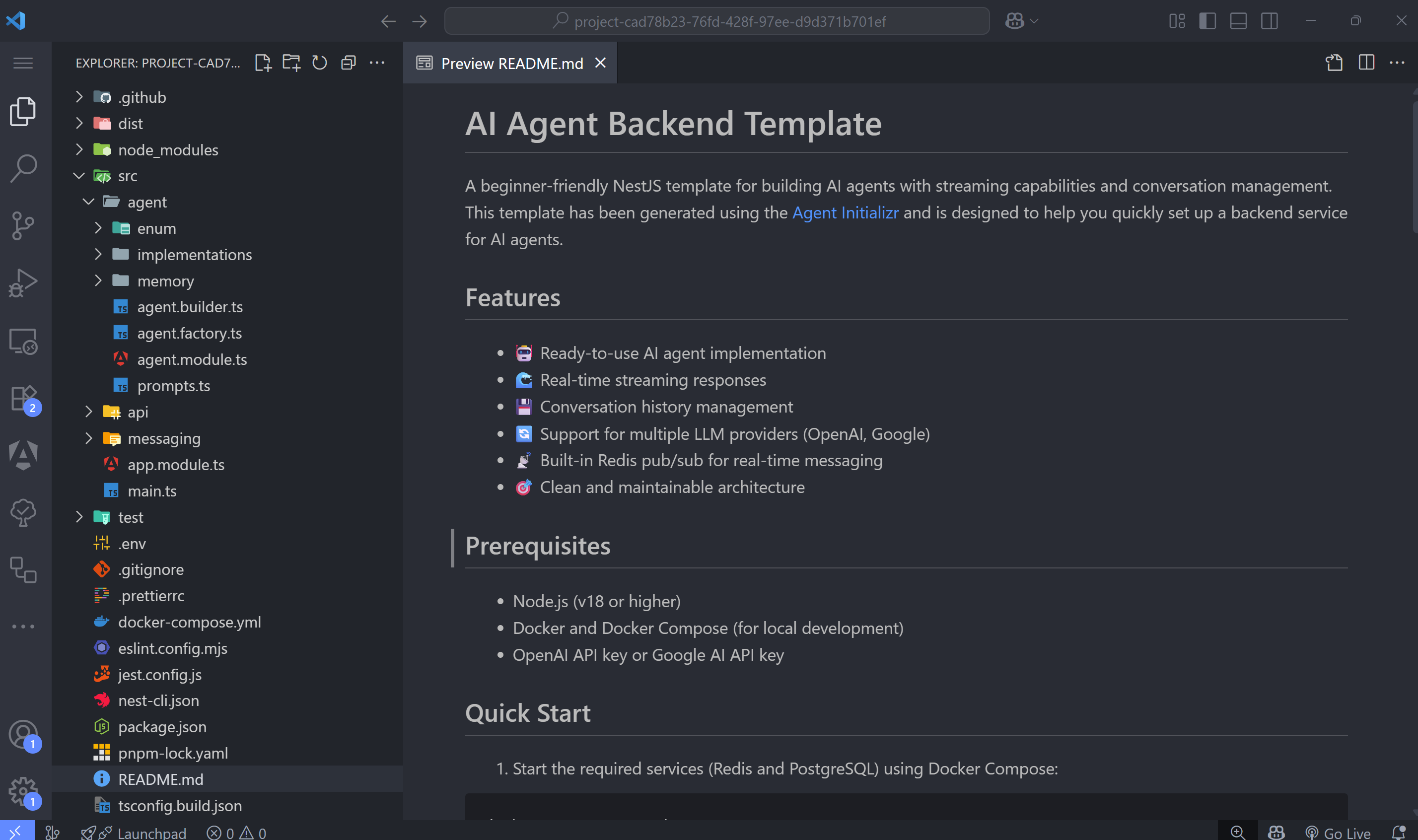

Before writing a single line of Playground-specific logic, I generated the backend using Agent Initializr.

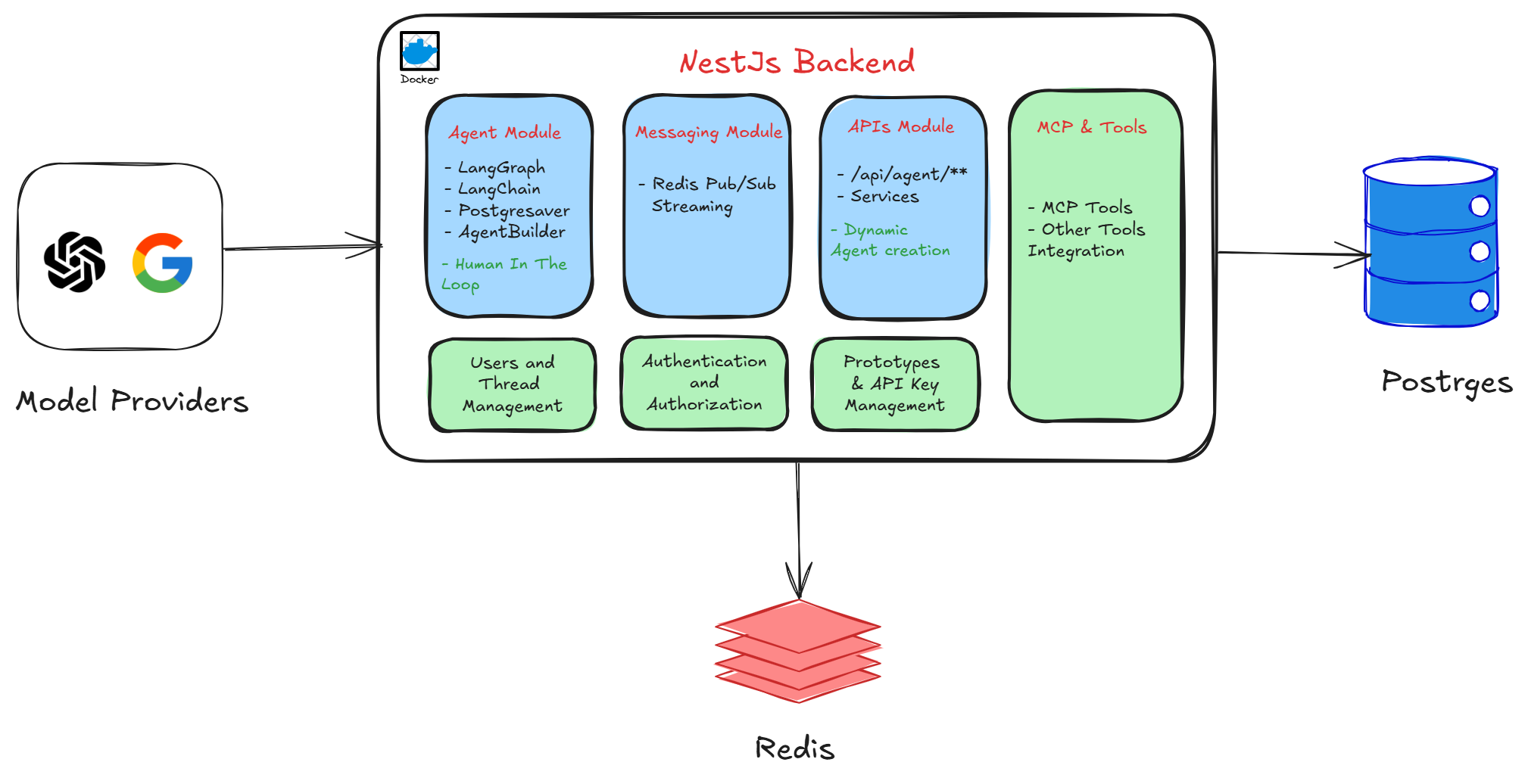

In less than a minute, I had a production-ready NestJS project with a clean, layered architecture ready for extension.

It came pre-configured with:

- A `docker-compose.yml` spinning up PostgreSQL and Redis for local development.

- Prebuilt endpoints like:

  - `POST /api/agent/chat` – send messages to the agent

  - `GET /api/agent/stream` – real-time streaming responses

  - `GET /api/agent/history/:threadId` – fetch past conversations

This scaffold didn’t just save me time; it gave me a battle-tested starting point, so I could focus 100% on the Playground’s unique features instead of boilerplate.

In fact, if all you need is a basic AI agent, you could use this scaffold as-is and be up and running in no time.

Turning the Scaffold into the Playground

Agent Initializr gave me a solid AI agent backend out of the box. From there, I layered on the Playground-specific features that turned it into a real SaaS product:

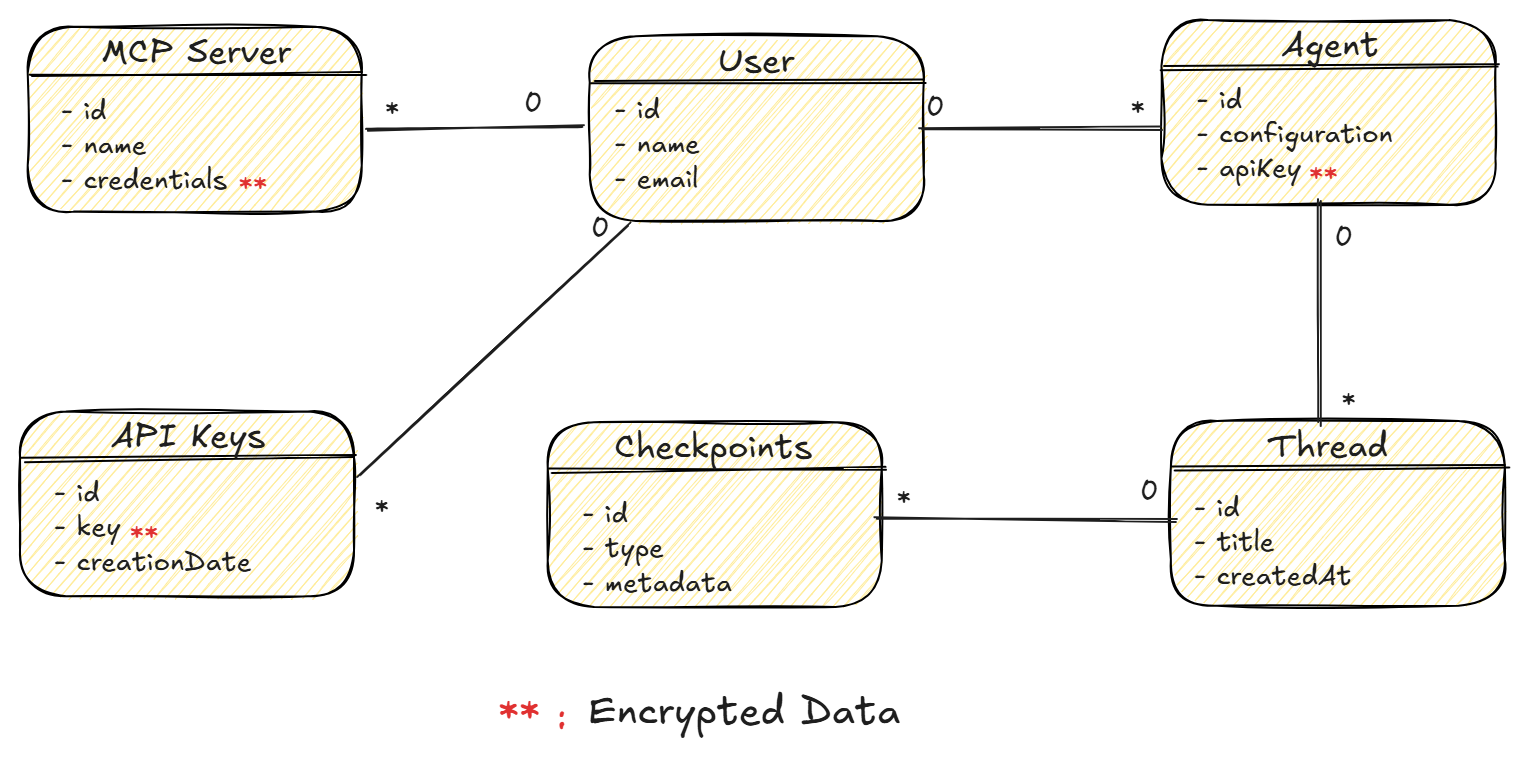

1. Multi-Tenancy & Authentication

- Integrated Auth0 JWT authentication

- Implemented a `users` entity to scope data per tenant.

- Added a `GlobalAuthGuard`:

  - `/api/v1/*` accepts API keys. These APIs are used for public access to agents.

  - All other routes require a JWT.

```typescript
// Method of GlobalAuthGuard, which extends the Passport JWT guard (AuthGuard('jwt')).
async canActivate(context: ExecutionContext): Promise<boolean> {
  const request = context.switchToHttp().getRequest();
  const { path, method } = request;

  // Other logic ...

  // Public agent API: accept an API key and attach its owner to the request
  if (path.startsWith('/api/v1/')) {
    const apiKey = this.extractApiKey(request);
    if (apiKey) {
      const user = await this.apiKeysService.validateApiKeyAndGetUser(apiKey);
      if (user) {
        request.user = user;
        return true;
      }
    }
  }

  // The super.canActivate call triggers the standard JWT validation from NestJS.
  // Its declared type also allows an Observable, so the awaited result is cast.
  return (await super.canActivate(context)) as boolean;
}
```
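The guard relies on `this.extractApiKey(request)`, which isn't shown. A minimal sketch, assuming keys arrive either in an `x-api-key` header or as `Authorization: Bearer <key>`, and assuming issued keys carry a recognizable prefix (the `pk_` below is hypothetical) so a bearer API key can be told apart from a JWT:

```typescript
// Minimal request shape; Node/Express lower-case incoming header names.
interface RequestLike {
  headers: Record<string, string | string[] | undefined>;
}

// Hypothetical prefix that distinguishes API keys from JWT bearer tokens.
const API_KEY_PREFIX = "pk_";

// Returns the first value of a header, handling repeated headers (arrays).
function firstHeader(req: RequestLike, name: string): string | undefined {
  const value = req.headers[name.toLowerCase()];
  return Array.isArray(value) ? value[0] : value;
}

function extractApiKey(req: RequestLike): string | undefined {
  // 1. A dedicated header takes precedence (hypothetical header name).
  const headerKey = firstHeader(req, "x-api-key")?.trim();
  if (headerKey) return headerKey;

  // 2. Otherwise accept "Authorization: Bearer <key>", but only when the token
  //    looks like an API key; anything else is left for JWT validation.
  const auth = firstHeader(req, "authorization");
  const match = auth?.match(/^Bearer\s+(\S+)$/i);
  if (match && match[1].startsWith(API_KEY_PREFIX)) return match[1];

  return undefined;
}
```

Returning `undefined` rather than throwing keeps the guard's fallback intact: a request without a recognizable key simply proceeds to JWT validation.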

Key change from the scaffold: the generated template had no tenant separation or auth; both were built on top. Below is a simplified class diagram showing the key entities and their relationships:

2. Prototype Management & Model Configs

- Added `Prototype` entities so each user can store:

  - Model provider (OpenAI, Google Gemini, etc.), model name, and other model settings.

  - Provider API key (stored encrypted).

- Built endpoints to create, update, and delete prototypes.

Why: This allows each developer to run agents with their own keys and configurations, without leaking credentials.
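The post doesn’t say which cipher protects the stored provider keys. As a sketch, assuming AES-256-GCM from Node’s built-in `crypto` module and a 32-byte key loaded from configuration, an `encrypt`/`decrypt` pair compatible with the `encryptionService.decrypt(prototype.apiKey)` call in the `chatWithAgent` snippet later in this post could look like this (the storage format is an assumption):

```typescript
import { createCipheriv, createDecipheriv, randomBytes } from "node:crypto";

// Minimal AES-256-GCM helper (a sketch; the Playground's actual scheme is not shown).
// Ciphertexts are stored as "iv:authTag:data", each part base64-encoded.
class EncryptionService {
  private readonly key: Buffer;

  constructor(key: Buffer) {
    if (key.length !== 32) throw new Error("AES-256 requires a 32-byte key");
    this.key = key;
  }

  encrypt(plaintext: string): string {
    const iv = randomBytes(12); // fresh 96-bit nonce per message, the usual size for GCM
    const cipher = createCipheriv("aes-256-gcm", this.key, iv);
    const data = Buffer.concat([cipher.update(plaintext, "utf8"), cipher.final()]);
    const tag = cipher.getAuthTag(); // lets decrypt() detect tampering
    return [iv, tag, data].map((b) => b.toString("base64")).join(":");
  }

  decrypt(payload: string): string {
    const parts = payload.split(":");
    if (parts.length !== 3) throw new Error("Malformed ciphertext");
    const [iv, tag, data] = parts.map((p) => Buffer.from(p, "base64"));
    const decipher = createDecipheriv("aes-256-gcm", this.key, iv);
    decipher.setAuthTag(tag);
    // final() throws if the auth tag does not match the data
    return Buffer.concat([decipher.update(data), decipher.final()]).toString("utf8");
  }
}
```

GCM is chosen here because it authenticates as well as encrypts, so a modified database value fails loudly instead of decrypting to garbage.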

3. Secure API Key System

- Created an API keys module with:

  - Secure key generation (using `crypto`)

  - Hashing before storage

  - One-time display on creation

- API keys enable public API access to agents under `/api/v1/*` routes.
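Those three properties fit in a few lines of Node’s `crypto`. A minimal sketch, assuming SHA-256 for hashing (adequate for high-entropy random keys, unlike user passwords) and a hypothetical `pk_` prefix. The function names are illustrative, not the Playground’s actual `ApiKeysService`:

```typescript
import { createHash, randomBytes, timingSafeEqual } from "node:crypto";

// Hypothetical prefix; makes keys recognizable in logs and secret scanners.
const PREFIX = "pk_";

function hashApiKey(key: string): string {
  return createHash("sha256").update(key).digest("hex");
}

// `plaintext` is shown to the user once and never stored; only `hash`
// (plus a short, non-secret `lookupPrefix` for display) is persisted.
function generateApiKey(): { plaintext: string; hash: string; lookupPrefix: string } {
  const plaintext = PREFIX + randomBytes(32).toString("base64url");
  return {
    plaintext,
    hash: hashApiKey(plaintext),
    lookupPrefix: plaintext.slice(0, PREFIX.length + 6),
  };
}

// Compares a presented key against a stored hash in constant time.
function verifyApiKey(presented: string, storedHash: string): boolean {
  const a = Buffer.from(hashApiKey(presented), "hex");
  const b = Buffer.from(storedHash, "hex");
  return a.length === b.length && timingSafeEqual(a, b);
}
```

Because the hash is deterministic, a validation method can also look a key up directly by its hash (for example, via a unique index on that column).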

4. Persistent Memory with Postgres Checkpointing

- Used the provided LangGraph PostgresSaver for persistent memory.

- Each thread is tied to a `prototypeId` and scoped per user.

Benefit: Conversations survive restarts and can be retrieved at any time.

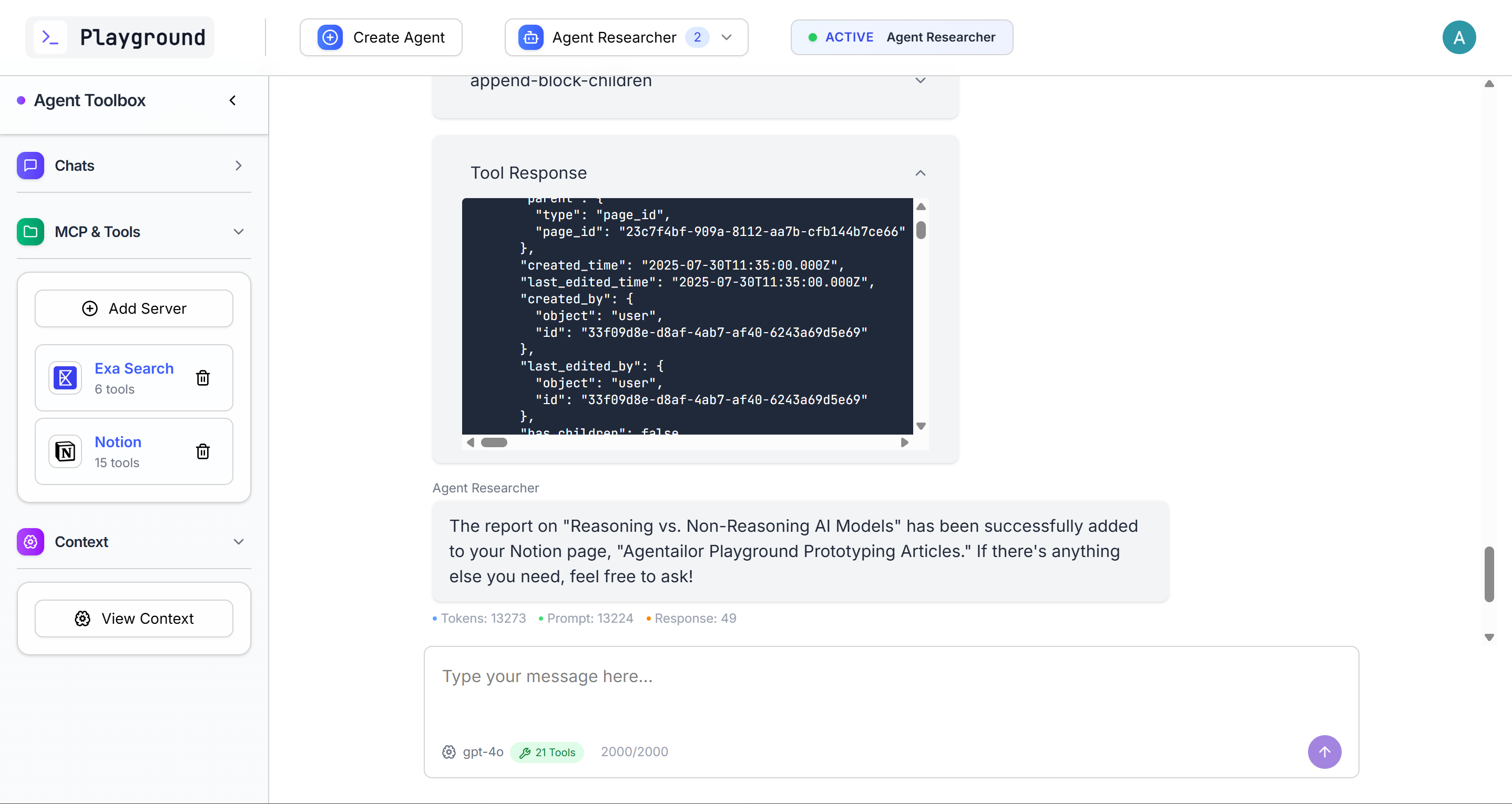
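LangGraph checkpointers such as `PostgresSaver` store and load state by the `thread_id` in the run config (`{ configurable: { thread_id } }`). The post doesn’t show how threads are scoped. One hedged approach to per-user, per-prototype isolation is to namespace that id and check ownership before reading history. The helper names and the `user:prototype:thread` format below are hypothetical:

```typescript
interface ThreadRef {
  userId: string;
  prototypeId: string;
  threadId: string;
}

// Builds the config passed to graph.invoke()/stream(), so the checkpointer
// keys saved state by an id that already encodes its owner.
function threadConfig(ref: ThreadRef): { configurable: { thread_id: string } } {
  for (const part of [ref.userId, ref.prototypeId, ref.threadId]) {
    // ":" is the separator, so it must not appear inside a segment
    if (!part || part.includes(":")) throw new Error(`Invalid id segment: "${part}"`);
  }
  return { configurable: { thread_id: `${ref.userId}:${ref.prototypeId}:${ref.threadId}` } };
}

// True only if a stored thread_id belongs to this user and prototype,
// e.g. before serving GET /api/agent/history/:threadId.
function ownsThread(threadKey: string, userId: string, prototypeId: string): boolean {
  const parts = threadKey.split(":");
  return parts.length === 3 && parts[0] === userId && parts[1] === prototypeId && parts[2].length > 0;
}
```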

5. Tools & MCP Integration

- Integrated the Smithery MCP registry to let users attach external tool servers (Notion, Slack, GitHub, etc.) using the Model Context Protocol (MCP).

- Cached MCP tools to avoid repeated lookups.

- Individual tool integration is still possible.

```typescript
async findToolsByServers(
  serverNames: string[],
  user: AuthenticatedUser,
  forceRefresh = false,
): Promise<DynamicStructuredTool[]> {
  // Check the cache first
  const cachedTools = await this.mcpToolsCacheService.getTools(serverNames, user, forceRefresh);
  if (cachedTools) {
    return cachedTools;
  }

  // Other logic omitted; `servers` used below is resolved here.

  const mcpServers: Record<string, any> = {};
  const baseUrl = this.configService.get<string>('SMITHERY_API_URL') || 'https://server.smithery.ai';

  for (const server of servers) {
    mcpServers[server.id] = {
      url: `${baseUrl}/${server.name}/mcp`,
      authProvider: new BrowserOAuthProvider(server.id, server.name, user),
    };
  }

  // MultiServerMCPClient comes from the LangChain MCP adapters package (@langchain/mcp-adapters)
  const client = new MultiServerMCPClient({ mcpServers });
  const mcpTools = await client.getTools();

  // Cache the fetched tools
  await this.mcpToolsCacheService.cacheTools(serverNames, user, mcpTools);

  return mcpTools;
}
```

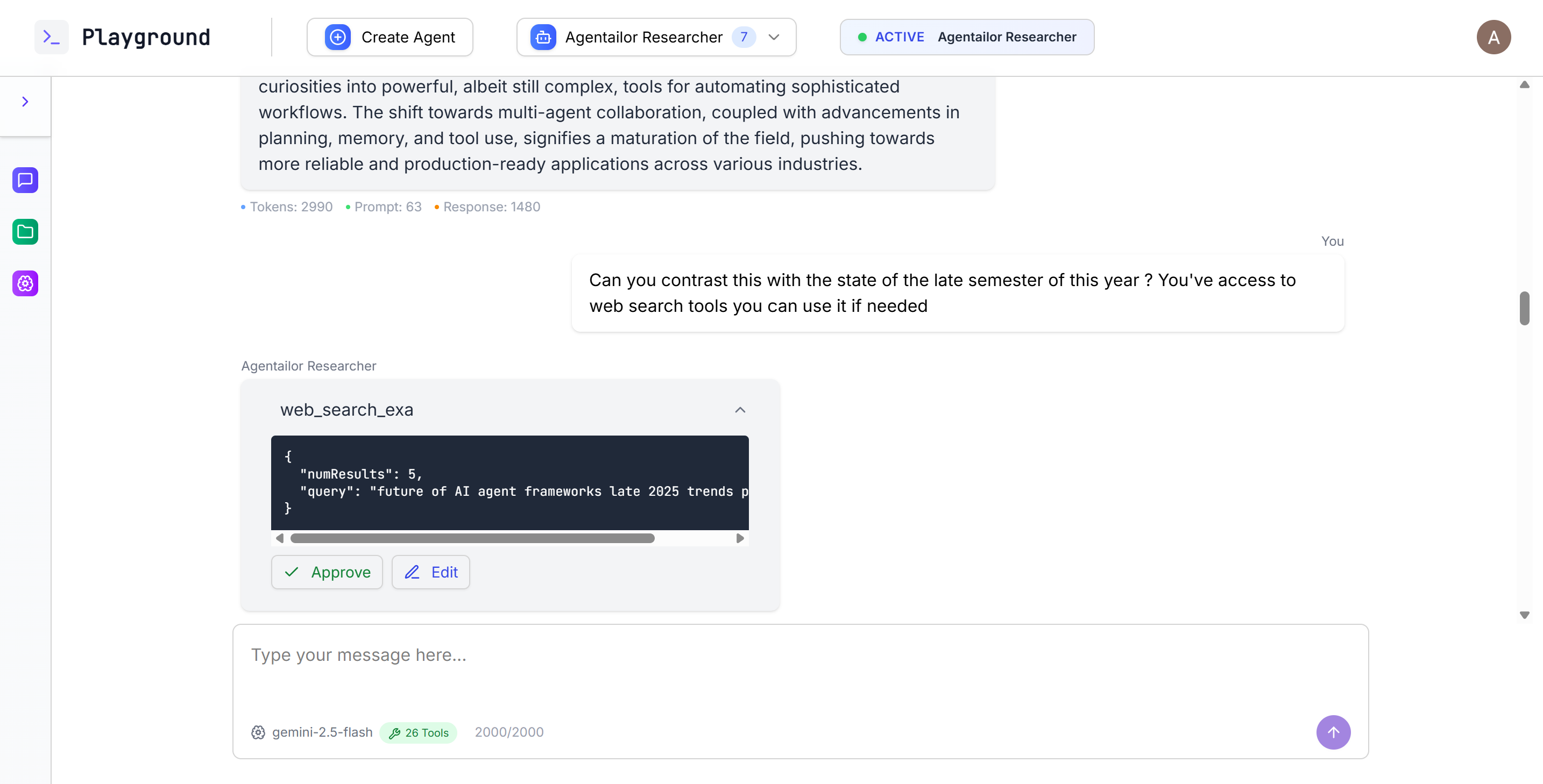
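The `mcpToolsCacheService` itself isn’t shown. A minimal in-memory sketch illustrates the idea. It assumes tools are cached per user and per *set* of server names with a fixed TTL; both are assumptions, and the real service may well use Redis. Sorting the names makes `['github', 'slack']` and `['slack', 'github']` share an entry, and `forceRefresh` bypasses the cache:

```typescript
// Generic over the cached value so the sketch doesn't depend on LangChain types;
// in the Playground this would be DynamicStructuredTool[]. Keyed by a user id string.
class McpToolsCache<T> {
  private readonly entries = new Map<string, { value: T; expiresAt: number }>();
  private readonly ttlMs: number;
  private readonly now: () => number;

  constructor(ttlMs = 5 * 60 * 1000, now: () => number = Date.now) {
    this.ttlMs = ttlMs; // hypothetical 5-minute default
    this.now = now; // injectable clock, handy for tests
  }

  // Order-insensitive key: same user + same set of servers => same entry.
  private key(serverNames: string[], userId: string): string {
    return `${userId}|${[...serverNames].sort().join(",")}`;
  }

  getTools(serverNames: string[], userId: string, forceRefresh = false): T | undefined {
    if (forceRefresh) return undefined; // caller refetches and re-caches
    const k = this.key(serverNames, userId);
    const entry = this.entries.get(k);
    if (!entry) return undefined;
    if (entry.expiresAt <= this.now()) {
      this.entries.delete(k); // expired: evict lazily on read
      return undefined;
    }
    return entry.value;
  }

  cacheTools(serverNames: string[], userId: string, value: T): void {
    this.entries.set(this.key(serverNames, userId), { value, expiresAt: this.now() + this.ttlMs });
  }
}
```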

6. Added Human in the Loop Support

- Implemented human-in-the-loop support for MCP tool calls using LangGraph interrupts.

- Allows human reviewers to validate or edit the agent’s output before a tool is invoked.

Tool call validation in Playground
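With LangGraph’s `interrupt()`, the graph pauses before the tool runs. It is later resumed with the reviewer’s answer (in LangGraph.js, by invoking the graph with `new Command({ resume: ... })`). The decision-handling step can be sketched independently of LangGraph. The `ReviewDecision` shape and `applyReview` helper below are hypothetical, not the Playground’s actual types:

```typescript
// A tool call proposed by the model (loosely mirrors LangChain tool calls).
interface ToolCall {
  id: string;
  name: string;
  args: Record<string, unknown>;
}

// Hypothetical payload a reviewer sends back when resuming the paused run.
type ReviewDecision =
  | { action: "approve" }
  | { action: "edit"; args: Record<string, unknown> }
  | { action: "reject"; reason?: string };

// Returns the tool call to execute, or null if the reviewer rejected it.
function applyReview(call: ToolCall, decision: ReviewDecision): ToolCall | null {
  switch (decision.action) {
    case "approve":
      return call;
    case "edit":
      // Reviewer-supplied args override the model's; unspecified args are kept.
      return { ...call, args: { ...call.args, ...decision.args } };
    case "reject":
      return null;
  }
}
```

Inside the graph, the value that `interrupt(...)` returns on resume is the reviewer’s payload, which is what a helper like this would consume before the tool node runs.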

7. Thread & Agent Execution Management

- Built endpoints to list all threads for a prototype, send messages to an agent, and retrieve thread history.

- Extended the scaffolded `AgentService` to decrypt prototype keys on the fly, load MCP tools dynamically per request, and track token usage and tool interrupts in responses.

The full list of APIs is in the Playground API documentation. Below is a snippet of what happens when you chat with an agent:

```typescript
async chatWithAgent(
  prototypeId: string,
  user: AuthenticatedUser,
  message: string,
  options?: ChatOptions,
): Promise<ChatResponse> {
  // Load prototype and decrypt key
  const prototype = await this.prototypesService.getPrototype(prototypeId, user);
  const decryptedKey = this.encryptionService.decrypt(prototype.apiKey);

  // Load tools
  const tools = await this.mcpToolsService.findToolsByServers(prototype.mcpServers, user);

  // Call agent with decrypted key and tools
  return this.agentService.chatWithAgent(prototype, decryptedKey, message, tools, options);
}
```
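Tracking token usage can be as simple as summing the `usage_metadata` that LangChain attaches to AI messages (`input_tokens`, `output_tokens`, `total_tokens`). The sketch below uses plain objects so it runs without LangChain installed. The `TokenUsage` name and the choice to sum across every message in a turn are assumptions:

```typescript
interface TokenUsage {
  input_tokens: number;
  output_tokens: number;
  total_tokens: number;
}

interface MessageLike {
  usage_metadata?: TokenUsage;
}

// Sums usage across the messages produced in one turn (e.g. several model
// calls when the agent invokes tools in between). Messages without
// usage_metadata, such as human or tool messages, are skipped.
function sumTokenUsage(messages: MessageLike[]): TokenUsage {
  return messages.reduce<TokenUsage>(
    (acc, m) => {
      const u = m.usage_metadata;
      if (!u) return acc;
      return {
        input_tokens: acc.input_tokens + u.input_tokens,
        output_tokens: acc.output_tokens + u.output_tokens,
        total_tokens: acc.total_tokens + u.total_tokens,
      };
    },
    { input_tokens: 0, output_tokens: 0, total_tokens: 0 },
  );
}
```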

8. Database Migration Setup and Production-Readiness Touches

- Added migrations for all new entities (prototypes, API keys, MCP servers, users).

- CORS configuration (open in dev, restricted in prod).

- Environment variable validation for all critical configs.
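NestJS’s `ConfigModule.forRoot()` accepts a `validate` function that receives the raw environment and can throw to abort startup. Below is a dependency-free sketch of such a function that also covers the dev/prod CORS split. All variable names are hypothetical except `SMITHERY_API_URL`, which appears in the MCP code above. The hex encoding for the key is also an assumption:

```typescript
interface AppConfig {
  NODE_ENV: "development" | "production" | "test";
  DATABASE_URL: string;
  REDIS_URL: string;
  ENCRYPTION_KEY: string;
  SMITHERY_API_URL: string;
  CORS_ORIGINS: string[]; // empty in development is treated as "allow all"
}

// Collects every problem and throws once, so a misconfigured deploy fails fast.
function validateEnv(env: Record<string, string | undefined>): AppConfig {
  const errors: string[] = [];
  const nodeEnv = env.NODE_ENV ?? "development";
  if (!["development", "production", "test"].includes(nodeEnv)) {
    errors.push(`NODE_ENV is invalid: ${nodeEnv}`);
  }

  for (const name of ["DATABASE_URL", "REDIS_URL", "ENCRYPTION_KEY"]) {
    if (!env[name]) errors.push(`${name} is required`);
  }
  // 32 bytes for AES-256, supplied as 64 hex characters
  if (env.ENCRYPTION_KEY && !/^[0-9a-fA-F]{64}$/.test(env.ENCRYPTION_KEY)) {
    errors.push("ENCRYPTION_KEY must be 64 hex characters");
  }

  // Open CORS in development, explicit allow-list required in production
  const corsOrigins = (env.CORS_ORIGINS ?? "").split(",").map((s) => s.trim()).filter(Boolean);
  if (nodeEnv === "production" && corsOrigins.length === 0) {
    errors.push("CORS_ORIGINS is required in production");
  }

  if (errors.length > 0) throw new Error(`Invalid environment:\n- ${errors.join("\n- ")}`);

  return {
    NODE_ENV: nodeEnv as AppConfig["NODE_ENV"],
    DATABASE_URL: env.DATABASE_URL!,
    REDIS_URL: env.REDIS_URL!,
    ENCRYPTION_KEY: env.ENCRYPTION_KEY!,
    SMITHERY_API_URL: env.SMITHERY_API_URL ?? "https://server.smithery.ai",
    CORS_ORIGINS: corsOrigins,
  };
}
```

It would be wired in as `ConfigModule.forRoot({ validate: validateEnv })`.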

Result:

In less than two weeks, I went from a generic AI agent scaffold to a fully functional, multi-tenant AI SaaS backend — all without reinventing the boilerplate.

The Infrastructure and Deployment

To move fast, I used the following services to deploy the Playground:

- Render: Used for deploying both the backend and the frontend. I chose it for its simplicity and seamless Git-based workflow, which automates builds and deployments on every push.

- Neon: Used for the Postgres database. Its serverless nature means I don't have to worry about provisioning or scaling, which is perfect for an MVP.

- Auth0: Handled all user authentication. Integrating a professional identity service like Auth0 saved me days of work.

- Upstash: Provided a managed Redis instance. It's fast, reliable, and essential for the Pub/Sub messaging system that powers the real-time streaming of agent responses.
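The Pub/Sub pattern behind the streaming can be sketched as follows. It assumes a producer running the agent publishes chunks to a per-thread channel, and the handler behind `GET /api/agent/stream` subscribes and forwards them to the client. The sketch swaps Redis for an in-memory bus built on Node’s `EventEmitter` so it runs standalone. The `thread:<id>` channel naming, the `[DONE]` sentinel, and the Server-Sent Events framing are all assumptions, not details from the Playground:

```typescript
import { EventEmitter } from "node:events";

// In-memory stand-in for Redis Pub/Sub, with the same basic shape:
// publish(channel, message) and subscribe(channel, handler) -> unsubscribe.
class InMemoryPubSub {
  private readonly emitter = new EventEmitter();

  publish(channel: string, message: string): void {
    this.emitter.emit(channel, message);
  }

  subscribe(channel: string, handler: (message: string) => void): () => void {
    this.emitter.on(channel, handler);
    return () => this.emitter.off(channel, handler);
  }
}

const channelFor = (threadId: string) => `thread:${threadId}`;

// Formats one Server-Sent Events frame; multi-line data needs one "data:" line each.
function toSseFrame(data: string): string {
  return data.split("\n").map((line) => `data: ${line}`).join("\n") + "\n\n";
}

// Subscriber side: forwards one thread's chunks to a writer (e.g. res.write)
// and unsubscribes once the producer publishes the "[DONE]" sentinel.
function streamThread(bus: InMemoryPubSub, threadId: string, write: (frame: string) => void): void {
  const unsubscribe = bus.subscribe(channelFor(threadId), (chunk) => {
    write(toSseFrame(chunk));
    if (chunk === "[DONE]") unsubscribe();
  });
}
```

With a real Redis client, the subscriber typically needs its own dedicated connection, because a connection in subscribe mode can’t issue regular commands.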

Result: This setup allows me to focus on rapid development and deployment without worrying about infrastructure management.

From Scaffold to Live Product

By starting with Agent Initializr instead of a blank folder, I skipped straight past the boilerplate and spent my time building only what mattered for the Playground.

Timeline from idea to production:

- Day 0: Defined the core goal, a multi-tenant AI prototyping backend.

- Days 1–2: Generated the NestJS + LangGraphJS scaffold with Agent Initializr (30 seconds) and created a simple React + TailwindCSS frontend to interact with the backend.

- Days 3–4: Added authentication, prototype management, and encrypted API key storage.

- Days 5–7: Integrated MCP tools, human-in-the-loop review, and thread management.

- Days 8–10: Built and tested all public API endpoints for agents, history, and tools.

- Days 11–12: Final tweaks, deployment, and security hardening.

- Days 13–14: Live and tested with real users.

Why this matters:

The Playground exists as proof that a real-world AI SaaS can be built from Agent Initializr’s foundation in under two weeks, production features included.

Lessons for Developers

The Playground isn’t just an app I built — it’s a case study in how much faster you can move when you start with a strong foundation.

The key lessons for me:

- Separate foundation from differentiation – The heavy lifting (auth, API structure, streaming, DB integration) doesn’t need to be reinvented.

- Build in layers – Start with a scaffold, then add features in small, testable increments.

- Keep production in mind early – Multi-tenancy, encryption, and migrations weren’t afterthoughts; they were built alongside the core logic.

- Validate before you overbuild – In two weeks, I had a live product that proved the concept without locking me into months of work.

- Use AI tools where they help – Don’t hesitate to leverage existing AI tools to accelerate development. In my case, I already had GitHub Copilot installed, so I used it; its Agent Mode is quite good.

For anyone working in AI SaaS, that balance of speed and structure can be the difference between shipping and stalling.

The Logical Next Steps for Agent Playground

- Rate Limiting – Implement rate limiting on API keys to prevent abuse.

- Monitoring & Logging – Add monitoring and logging to track usage patterns and improve the product.
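For the rate-limiting item, a token bucket per API key is a common starting point. The sketch below is single-process. A multi-instance deployment would keep these counters in a shared store, such as the existing Redis instance. The capacity and refill rate are placeholder values:

```typescript
// Token bucket per API key: each key may burst up to `capacity` requests,
// then is limited to `refillPerSec` requests per second on average.
class TokenBucketLimiter {
  private readonly buckets = new Map<string, { tokens: number; last: number }>();
  private readonly capacity: number;
  private readonly refillPerSec: number;
  private readonly now: () => number;

  constructor(capacity = 20, refillPerSec = 1, now: () => number = Date.now) {
    this.capacity = capacity;
    this.refillPerSec = refillPerSec;
    this.now = now; // injectable clock for testing
  }

  // Returns true and consumes a token if the request is allowed.
  tryConsume(apiKeyId: string): boolean {
    const t = this.now();
    const bucket = this.buckets.get(apiKeyId) ?? { tokens: this.capacity, last: t };
    // Refill proportionally to elapsed time, capped at capacity.
    const elapsedSec = (t - bucket.last) / 1000;
    bucket.tokens = Math.min(this.capacity, bucket.tokens + elapsedSec * this.refillPerSec);
    bucket.last = t;
    const allowed = bucket.tokens >= 1;
    if (allowed) bucket.tokens -= 1;
    this.buckets.set(apiKeyId, bucket);
    return allowed;
  }
}
```

A guard could call `tryConsume` right after a key is validated and respond with HTTP 429 when it returns `false`.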

Conclusion

The Playground runs on the exact same foundation that Agent Initializr generates: nothing hidden, nothing custom-baked into the scaffold just for me.

If you’re building something similar, whether it’s an internal tool, a client-facing MVP, or a production AI service, starting with a scaffold like this can save you weeks of setup.

The code I used to start is free to generate in 30 seconds. What you do with it after that is entirely up to you.

What to Do Next?

- Want to build something similar? Start with Agent Initializr to get a solid foundation in minutes.

- Need help? Join the Agentailor community on Discord to connect with other builders and get support.

- Have any questions or feedback? Reach out on X — I’d love to hear from you!

- Want to read more about Agent Initializr? Check out How to Build a Fullstack AI Agent with LangGraphJS and NestJS (Using Agent Initializr)
